Time Series Model

If you recall back to our section on linear regression, we said the following about the regression assumptions, we said the following about the assumption that residuals are independent from one another:

“This assumption… is extremely important! This is because you can not defeat it by adding more data since the Central Limit Theorem requires samples to be independent of one another!”

“But what does it look like in practice for errors to be not independent? 99% of the time, it means that the data as a temporal element, i.e., stock prices. It is not reasonable to assume that the stock price of one day will not influence the stock price of the day after, the two are clearly related. If you try to run traditional regression on data with a temporal element, you’ll end up with a model that fits the data extremely well. This is because the model is overfitting the data by modeling the progression of time, rather than the data itself!”

If you have data with a temporal element, you need to switch to a time series model like ARIMA to get valid results.”

This ARIMA model is not a regression model in and of itself, however, it can be used to both:

Control for temporal effects so that standard regression can be used

Forecast data in the short-term

This section covers this model and it’s related regression model, but first, we need to introduce an important aid and concept that will make our explanation easier

The Lag Operator

The Lag operator, , is a notational aid that helps in describing time series data and models. When we have a temporal data set the lag function returns the data point places behind the current data point. So and . In general

The lag operator distributes through multiplcation, so for example,

It’s important to note we use in this section to mean the th entry of a single variable, not the th independent feature in the dataset like other sections. You can call “the value of at time .”

ARIMA: What does it mean and why should you care?

ARIMA stands for - Autoregressive - Integrated - Moving Average

ARIMA models allow us to model data where subsequent data points are not independent: the value of one data point depends on the value of previous data points. Again, we can use the example of stock prices: at the very least, the closing price of a stock one day affects the opening price of the stock on the next day, so we have a clear dependency.

Remember, we need this additional model because the GLMs we have seen so far require that data points don’t depend on one another.

Now, let’s break those terms down

Autoregression

Autoregression refers to the correlation between two or more subsequent datapoints in a time series. The most basic form of the ARIMA model is the AR model, which attempts to model autoregression by representing each data point as a sum (specifically, a linear combination) of the previous data points, plus some white (Gaussian) noise. is found by a computer search or defined beforehand. So the process that generates the data is assumed to be of the form:

It’s important to note that all . This is because values that the model predicts would blow up into infinity otherwise. This is important to maintain stationarity (see below).

Integrating Factor

Finally, the I in ARIMA stands for an integrating factor. This refers to fitting the ARIMA model to a difference of data points rather than the data points themselves. For example, an integrating factor of one would fit an ARMA model on the data set x_'{t}\prime = x_{t} - L\left( x_{t} \right), which has only data points. Likewise, an integrating factor of two would fit an ARMA model on the data set x_'{t} = x_{t} - L\left( x_{t} \right) - L^{2}\left( x_{t} \right), using only data points and so on. In general, an integrating factor of will change the dataset to only values where To explain why we’d want to include an integrating factor, we must talk about the concept of stationarity

Stationarity

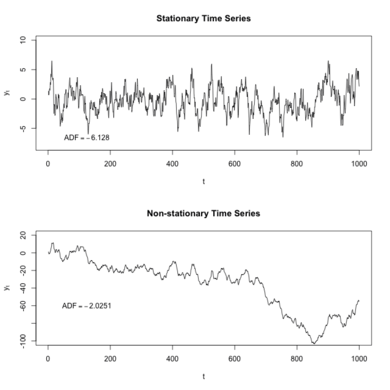

Stationarity refers to a situation where the structure of the data doesn’t change with time. It’s easiest to explain visually, below is a chart of two time series, one stationary and one not

As you can see, the stationary times series stays around its average, despite still fluctuating greatly from each point in time. Meanwhile, the non-stationary time series has treads, it stays constant, then goes downward slowly, then downward quickly, then upward.

While the time series on the bottom looks more realistic, since real-life data does often has trend, it is much more complicated to do analysis on. AR models require that the data set be stationary. Luckily, differencing via the integrating factor can “detrend” the data, allowing us to fit an AR model.

Moving Average

A moving average generally refers to a change in the mean of a time series over time. This feature of time series is what we refer to when we mean that there is an upward/downward trend.

The moving average model represents this by representing each data point as the sum of the errors of previous data datapoints.

Like in AR models, so that the model maintains stationarity. ## Bring It All Together The ARIMA model is a combination of all of the above elements. They are distinguished by the hyper-parameters , and , representing the hyperparameters for the autoregressive, integrating factor, and moving average components of the model. ARIMA models are denoted and have the form:

Broken down:

is the AR component

is the I component

is the MA component

Fitting and Evaluating ARIMA models

Fitting ARIMA models is a extremely complicated process since we have to fit 3 hyper-parameters and many parameters. Most methods use MLE, but the likelihood function for ARIMA model is extremely complicated and no reasonable interviewer would ask a question that would require you to know it.

When used for forecasting, various standard error metrics like mean error, mean absolution error, and mean squared error can be used to compare forecasts to observed data.

Seasonal ARIMA

A common feature of many time series is seasonality: threads that increase/decrease the average value of the time series at specific intervals that repeat every data points. This could be something like airline revenue increasing in the summer and holiday seasons.

To account for this, the Seasonal ARIMA model was developed to forecast data when seasonality was present. The SARIMA model includes four new parameters

- - The seasonality interval. If on monthly data, then the SARIMA model would include terms the quarterly lag of the current data points,

- - the AR component of seasonal time series, with parameters denoted by

- - the I factor of the seasonal time series

- - the MA component of the season time series, with parameters denoted by

SARIMA models are denoted as . So a would look like

From left to right, there are the AR component, Seasonal AR component, I factor, Seasonal I factor, MA component, and Seasonal MA component.

31%

CompletedYou have 43 sections remaining on this learning path.