Databricks Data Engineer Interview Guide (2025) – Questions & Prep Tips

Introduction

This guide to Databricks Data Engineer interview questions walks you through the skills that drive high-scale data infrastructure at one of the fastest-growing enterprise software companies. You’ll learn how to showcase your ability to optimize Apache Spark jobs, design fault-tolerant Delta Lake pipelines, and ensure your data architecture scales with real-time demands. These are the capabilities that power Databricks’ 140% net dollar retention and support over 11,500 global customers with industry-leading performance and reliability. As you prepare, we’ll help you translate technical contributions into business value, and we’ll cover Databricks Data Engineer salary later in the FAQs.

Role Overview & Culture

As a Databricks data engineer, your expertise in Python, SQL, Scala, and Spark positions you at the core of their unified analytics platform. You’ll work alongside data scientists and product teams to design scalable ETL systems, enforce governance policies, and build resilient pipelines using Delta Lake and Databricks Workflows. The culture emphasizes collaboration, continuous improvement, and hands-on ownership within a high-trust environment, reflected in its 4.3-star Glassdoor rating and 93% CEO approval.

Through code reviews, performance tuning, and workflow automation, you’ll enable customers to execute advanced analytics and machine learning at scale. You’ll also manage SLAs, deploy MLflow integrations, and contribute to processes that drive both innovation and enterprise-grade reliability.

Why This Role at Databricks?

Databricks empowers data engineers to lead complex, high-throughput workflows that underpin real-time decision-making and AI deployment for some of the world’s top enterprises. With its ARR reaching $3.04 billion by the end of 2024 and a continued 60% growth rate, the platform’s success depends on efficient, scalable data infrastructure—and that’s where you come in.

You’ll drive impact by optimizing compute resources, automating CI/CD pipelines, and designing robust Delta Lake architectures. These efforts ensure reliable data delivery and maintain performance across global workloads. If you’re ready to influence both product velocity and customer success with deep technical skill, this is your opportunity to shape the future of unified analytics.

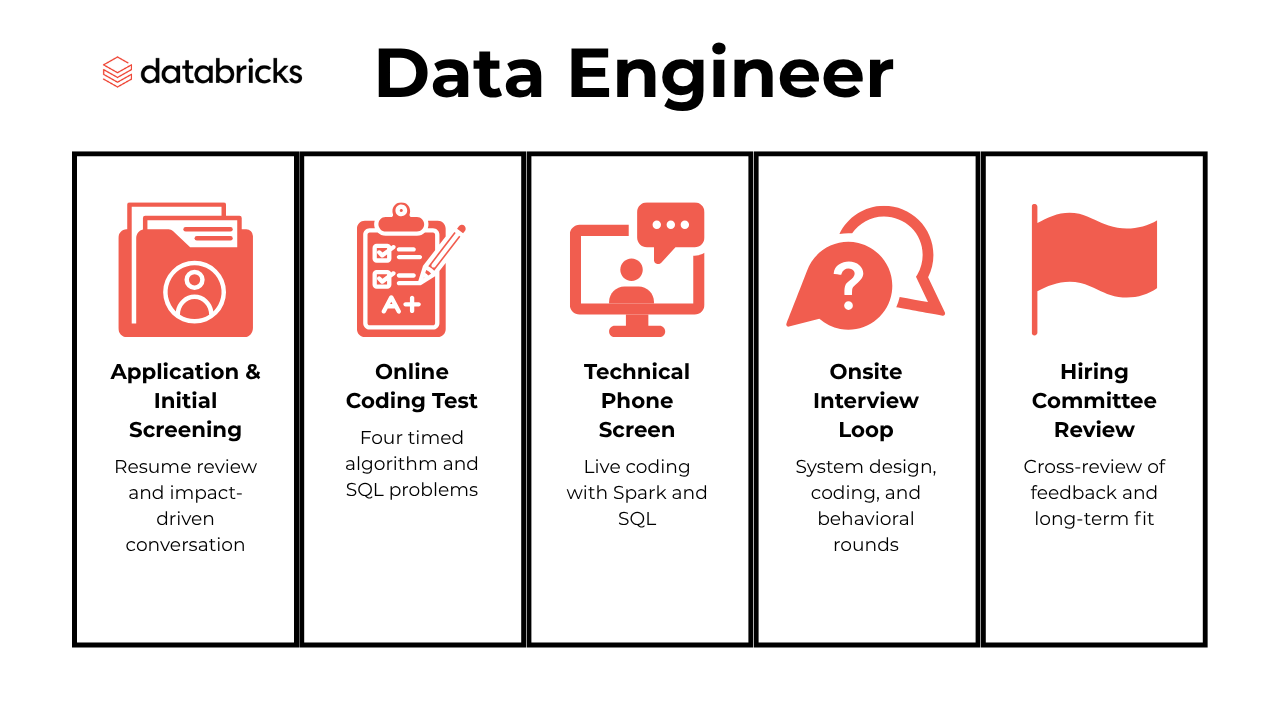

What Is the Interview Process Like for a Data Engineer Role at Databricks?

The Databricks Data Engineer interview process is designed to rigorously assess your technical expertise, problem-solving skills, and alignment with Databricks’ culture of innovation. The process is transparent and structured, and it moves through five stages:

- Application & Initial Screening

- Online Coding Test

- Technical Phone Screen (60–70 minutes)

- Onsite Interview Loop

- Hiring Committee Review

Application & Initial Screening

Your interview journey starts with a focused resume review, where Databricks’ recruiters evaluate your technical depth in distributed systems, cloud platforms, and data engineering tools like Apache Spark and Delta Lake. During the 30-minute recruiter call, you’ll highlight impact-driven achievements, such as pipeline scalability or cost optimizations. This is your opportunity to align your goals with Databricks’ mission to unify data and AI. Behind the scenes, your background is weighed against current team needs and future product directions. Databricks values candidates who show both initiative and clarity in communication. This round sets the tone, so prepare to articulate your career story with confidence and precision.

Online Coding Test

The online coding test is a high-stakes, 60- to 70-minute timed assessment that tests your ability to write efficient and correct code under time constraints. You’ll solve four questions covering medium to hard LeetCode-style algorithms, data structures, and advanced SQL involving window functions, joins, and nested queries. One or more problems typically reflect real-world data pipeline scenarios, such as optimizing transformation logic or handling distributed joins. Your code is benchmarked against historical performance metrics, including time to solve and computational efficiency. This stage previews the technical depth of later rounds and introduces the style of Databricks data engineering interview questions you’ll encounter throughout the process.

Technical Phone Screen (60–70 minutes)

In this round, you’ll collaborate live with a Databricks engineer using an online IDE. You’ll be tested on graph traversal, concurrency challenges, or building fault-tolerant data flows using Spark-like APIs. Expect scenario-based SQL queries and discussions about optimizing storage formats like Parquet or Delta. Interviewers evaluate how you approach debugging and explain trade-offs. Communication counts just as much as code quality—being able to narrate your process while staying efficient is a key differentiator. This round is also used to assess whether you can handle the kinds of ambiguous, high-scale ETL decisions that are core to Databricks’ engineering ethos.

Onsite Interview Loop

This is the most comprehensive stage, typically lasting four to five hours. You’ll face experts across system design, coding, case analysis, and behavioral interviews. For system design, you’ll architect a scalable ingestion-to-lakehouse pipeline using Spark, Delta Lake, and cloud storage, addressing latency, schema evolution, and access control. In coding, you’ll solve concurrency and streaming logic problems. Case studies probe your ability to debug SLA breaches, tune job execution, or implement multi-tenant ETL frameworks. Behavioral interviews assess cultural alignment, collaboration style, and adaptability. If you’re preparing for Databricks interview questions for senior data engineer roles, expect deeper architecture discussions and more complex trade-off evaluations.

Hiring Committee Review

After your onsite, a cross-functional hiring committee reviews every interview scorecard, interviewer feedback, and any submitted references. Databricks uses this stage to calibrate not just technical skill but long-term potential. You’ll be evaluated against a consistent rubric to ensure fairness across candidates. The team prioritizes engineers who demonstrate systems thinking, problem ownership, and alignment with Databricks’ mission. Most candidates receive final results within five business days. By this point, you’ve already showcased your skill and adaptability across varied challenges. With clarity and transparency built into every step, this final review ensures your interview journey ends with constructive, actionable feedback, whether or not you receive the offer.

What Questions Are Asked in a Databricks Data Engineer Interview?

The Databricks data engineer interview includes technical, design, and behavioral questions designed to test your ability to build scalable systems, solve complex data problems, and align with the company’s engineering culture.

Coding / Technical Questions

In this section, you’ll explore sample Databricks data engineer interview questions that test your SQL fluency, data structure knowledge, and algorithmic thinking through real-world use cases and logic-heavy problems:

1. Write a query to get the total three-day rolling average for deposits by day

To calculate the rolling three-day average for deposits, first aggregate daily deposits using a WHERE clause to filter positive transaction values and group by date. Then, perform a self-join on the aggregated table to include deposits from the current day and the previous two days, and compute the average using AVG().
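The aggregate-then-self-join approach described above can be sketched with Python's built-in `sqlite3` module. The table name `bank_transactions` and its columns `created_at` and `transaction_value` are assumptions made for illustration; the real schema in an interview may differ.

```python
import sqlite3


def rolling_three_day_deposits(rows):
    """rows: iterable of (created_at 'YYYY-MM-DD HH:MM:SS', transaction_value).

    Table and column names are hypothetical.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE bank_transactions (created_at TEXT, transaction_value REAL)"
    )
    conn.executemany("INSERT INTO bank_transactions VALUES (?, ?)", rows)
    query = """
        WITH daily AS (
            -- Step 1: keep deposits only (positive values) and total them per day.
            SELECT date(created_at) AS dt,
                   SUM(transaction_value) AS total_deposits
            FROM bank_transactions
            WHERE transaction_value > 0
            GROUP BY date(created_at)
        )
        -- Step 2: self-join each day to itself and the two preceding days.
        SELECT d.dt, AVG(p.total_deposits) AS rolling_three_day
        FROM daily AS d
        JOIN daily AS p
          ON p.dt BETWEEN date(d.dt, '-2 days') AND d.dt
        GROUP BY d.dt
        ORDER BY d.dt
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result
```

Note that this sketch averages over the days that actually have deposits inside each window. If days without deposits should count as zero, the daily table would first need to be joined against a calendar of dates.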

2. Find the average number of accepted friend requests for each age group

To solve this, use a RIGHT JOIN between the requests_accepted and age_groups tables to associate accepted friend requests with age groups. Calculate the average acceptance by dividing the count of accepted requests by the count of unique users in each age group, grouping by age group, and ordering the results in descending order.
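A minimal sketch of this query, run through Python's `sqlite3` module, is shown here. The column names (`user_id`, `age_group`, `acceptor_id`, `requester_id`) are assumptions, as is the choice to attribute each accepted request to the user who accepted it. The sketch writes the join as `age_groups LEFT JOIN requests_accepted`, which returns the same rows as `requests_accepted RIGHT JOIN age_groups` and also runs on older SQLite builds that lack `RIGHT JOIN`.

```python
import sqlite3


def avg_accepted_by_age_group(age_rows, accepted_rows):
    """age_rows: (user_id, age_group); accepted_rows: (acceptor_id, requester_id).

    Column names are hypothetical.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE age_groups (user_id INTEGER, age_group TEXT)")
    conn.execute(
        "CREATE TABLE requests_accepted (acceptor_id INTEGER, requester_id INTEGER)"
    )
    conn.executemany("INSERT INTO age_groups VALUES (?, ?)", age_rows)
    conn.executemany("INSERT INTO requests_accepted VALUES (?, ?)", accepted_rows)
    query = """
        SELECT a.age_group,
               -- COUNT(r.acceptor_id) ignores the NULLs produced for users
               -- with no accepted requests, so those users add 0 to the total.
               ROUND(COUNT(r.acceptor_id) * 1.0 / COUNT(DISTINCT a.user_id), 2)
                   AS avg_accepted
        FROM age_groups AS a
        LEFT JOIN requests_accepted AS r
          ON r.acceptor_id = a.user_id
        GROUP BY a.age_group
        ORDER BY avg_accepted DESC
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result
```

Keeping every row of `age_groups` is the point of the outer join: users who never accepted a request still count in the denominator.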

3. Write a query to retrieve all user IDs whose transactions have exactly a 10-second gap

To solve this, use the LAG() and LEAD() window functions to calculate the time difference between consecutive transactions. Then, filter the results to find transactions with a 10-second gap and select the distinct user IDs in ascending order.
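The window-function approach can be sketched as follows, again using `sqlite3` (window functions require SQLite 3.25 or newer). The `transactions` table and its `user_id` and `created_at` columns are assumptions. The sketch also assumes the gap is measured between a user's own consecutive transactions, in which case `LAG()` alone is enough; if the gap is instead measured across all transactions regardless of user, drop the `PARTITION BY` and compare against `LEAD()` as well so both rows of a pair are flagged.

```python
import sqlite3


def users_with_ten_second_gap(rows):
    """rows: iterable of (user_id, created_at 'YYYY-MM-DD HH:MM:SS').

    Table and column names are hypothetical.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE transactions (user_id INTEGER, created_at TEXT)")
    conn.executemany("INSERT INTO transactions VALUES (?, ?)", rows)
    query = """
        WITH ordered AS (
            SELECT user_id,
                   -- Convert timestamps to epoch seconds so they can be subtracted.
                   CAST(strftime('%s', created_at) AS INTEGER) AS ts,
                   CAST(strftime('%s', LAG(created_at) OVER (
                       PARTITION BY user_id ORDER BY created_at
                   )) AS INTEGER) AS prev_ts
            FROM transactions
        )
        SELECT DISTINCT user_id
        FROM ordered
        WHERE ts - prev_ts = 10   -- first row per user has NULL prev_ts and is skipped
        ORDER BY user_id
    """
    result = [row[0] for row in conn.execute(query)]
    conn.close()
    return result
```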

4. Write a function to compute the average salary using recency weighted average

To solve this, assign weights to salaries based on their recency, with the most recent year having the highest weight. Multiply each salary by its respective weight, sum the weighted salaries, and divide by the total weight. Round the result to two decimal places.
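One possible implementation is sketched below. It assumes the salaries arrive ordered from oldest to most recent and that weights grow linearly (1 for the oldest year, n for the most recent); the prompt in a real interview may specify a different weighting scheme.

```python
def recency_weighted_average(salaries):
    """Recency-weighted mean of salaries ordered oldest to most recent.

    Assumes linear weights 1..n, with n assigned to the most recent salary.
    """
    if not salaries:
        raise ValueError("salaries must not be empty")
    weights = range(1, len(salaries) + 1)
    weighted_sum = sum(w * s for w, s in zip(weights, salaries))
    return round(weighted_sum / sum(weights), 2)
```

For example, `recency_weighted_average([50000, 60000, 70000])` computes (1·50000 + 2·60000 + 3·70000) / 6 and returns `63333.33`.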

5. Given a dictionary, extract unique values that occur only once

To solve this, iterate through the dictionary values and count their occurrences using a list comprehension. Return a list of values that appear exactly once in the dictionary.
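A short sketch of this idea follows. It swaps in `collections.Counter` for the counting step so the values are tallied in a single pass, then uses a list comprehension to keep the ones seen exactly once. It assumes the dictionary values are hashable.

```python
from collections import Counter


def values_occurring_once(d):
    """Return the values of d that appear exactly once, in insertion order.

    Assumes all values are hashable.
    """
    counts = Counter(d.values())
    return [value for value in d.values() if counts[value] == 1]
```

Calling `values_occurring_once({"a": 1, "b": 2, "c": 1, "d": 3})` returns `[2, 3]`. Counting with `list.count()` inside the comprehension would also work but rescans the values for every element, which is quadratic in the size of the dictionary.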

To count how often a target word appears in an encrypted document, first decrypt the document by reversing the encryption formula for each letter. Then, count the occurrences of the target_word in the decrypted document by splitting the text into words and comparing each word to the target.

Data-Pipeline / Architecture Design Questions

These Databricks data engineering interview questions assess your ability to architect robust, fault-tolerant ETL pipelines, support evolving schemas, and optimize end-to-end data flows in production:

7. Design a data pipeline for hourly user analytics

To build this pipeline, you can use SQL queries to aggregate data for hourly, daily, and weekly active users. A naive approach involves querying the data lake directly for each dashboard refresh, while a more efficient solution aggregates and stores the data in a reporting table or view, updated hourly using an orchestrator like Airflow. This ensures scalability and reduces query latency.
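The reporting-table approach can be sketched as a small refresh function that an orchestrator task would call once per hour. The table names `events` and `hourly_active_users`, their columns, and the function names are all hypothetical, and SQLite stands in for the warehouse purely for illustration.

```python
import sqlite3


def create_tables(conn):
    """Create a hypothetical raw events table and the hourly reporting table."""
    conn.execute("CREATE TABLE events (user_id INTEGER, event_time TEXT)")
    conn.execute(
        "CREATE TABLE hourly_active_users ("
        "hour_start TEXT PRIMARY KEY, active_users INTEGER)"
    )


def refresh_hourly_active_users(conn, hour_start):
    """Recompute one hourly bucket, e.g. hour_start='2024-01-01 13:00:00'.

    INSERT OR REPLACE keyed on hour_start makes reruns safe: retrying the
    same hour overwrites the row instead of double counting.
    """
    conn.execute(
        """
        INSERT OR REPLACE INTO hourly_active_users (hour_start, active_users)
        SELECT ?, COUNT(DISTINCT user_id)
        FROM events
        WHERE event_time >= ? AND event_time < datetime(?, '+1 hour')
        """,
        (hour_start, hour_start, hour_start),
    )
    conn.commit()
```

Dashboards then read from `hourly_active_users` rather than scanning raw events on every refresh. Daily and weekly active users need their own distinct counts over the raw events, since distinct users cannot be summed across hourly rows.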

To solve this, implement a Change Data Capture (CDC)-based, event-driven architecture. Use CDC agents to stream changes into message brokers, apply schema mapping and conflict resolution, and ensure idempotent processing for eventual consistency. Prevent update loops with metadata and enforce deterministic conflict resolution policies, while maintaining operational resilience and monitoring for data drift.
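The ideas of idempotent processing, loop prevention through metadata, and deterministic conflict resolution can be illustrated with a small in-memory sketch. Everything here is hypothetical: the `ChangeEvent` fields, the `ReplicaStore` class, and the rule that the higher `(version, origin)` pair wins are illustrative choices, not a description of any particular CDC tool.

```python
from dataclasses import dataclass


@dataclass
class ChangeEvent:
    key: str
    value: dict
    version: int   # per-key counter, incremented on every write
    origin: str    # name of the system where the change was first made


class ReplicaStore:
    """One side of a two-way sync; stores key -> (version, origin, value)."""

    def __init__(self, name):
        self.name = name
        self.rows = {}

    def local_write(self, key, value):
        """Apply a local change and return the event to publish to the broker."""
        version = self.rows[key][0] + 1 if key in self.rows else 1
        self.rows[key] = (version, self.name, value)
        return ChangeEvent(key, value, version, self.name)

    def apply(self, event):
        """Apply a remote change; return True only if local state changed."""
        if event.origin == self.name:
            return False  # our own change echoed back: drop it to avoid a loop
        current = self.rows.get(event.key)
        if current is not None and current[:2] >= (event.version, event.origin):
            return False  # duplicate or losing write: replaying is a no-op
        self.rows[event.key] = (event.version, event.origin, event.value)
        return True
```

Because the comparison on `(version, origin)` is the same on both sides, two stores that receive each other's conflicting writes converge on the same value regardless of delivery order, and redelivered events change nothing.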

9. Design a scalable ETL pipeline for ingesting heterogeneous data from Skyscanner’s partners

To architect the ETL pipeline, use a modular design with components like orchestration (e.g., Apache Airflow), partner-specific connectors, a central message queue (e.g., Kafka), schema registry, transformation workers, and a unified storage layer (e.g., Elasticsearch). This ensures scalability, fault tolerance, and high data freshness while supporting diverse partner APIs and schemas. Monitoring, metadata management, and retry mechanisms further enhance reliability and operational transparency.

To architect this pipeline, use Apache Airflow for orchestration, Apache Spark for data processing, PostgreSQL for storage, and Metabase for visualization. Ingest raw data from internal databases and log files, transform and aggregate it using Spark, store the results in PostgreSQL, and expose curated metrics via Metabase dashboards. Airflow ensures automation, scheduling, and monitoring, while Metabase provides user-friendly reporting interfaces.

11. Redesign batch ingestion to real-time streaming for financial transactions

To transition from batch processing to real-time streaming, use a distributed log-based messaging system like Apache Kafka for ingestion, ensuring high throughput and durability. Implement a stream processing framework such as Apache Flink for real-time analytics and fraud detection, while maintaining exactly-once semantics. Store raw and processed data in scalable storage systems like Amazon S3 for compliance and historical analysis, and integrate real-time OLAP stores for low-latency querying. Ensure reliability through multi-region clusters, monitoring, and checkpointing mechanisms.

Behavioral / Culture Fit Questions

Below you’ll find sample Databricks interview questions and answers for data engineer candidates, focused on collaboration, ownership, communication, and alignment with Databricks’ values in a high-performance team environment:

For Databricks data engineers, this scenario can mirror the challenge of deploying pipelines under tight timelines while ensuring data integrity and system stability. It tests your ability to manage trade-offs between operational speed and long-term reliability. A strong answer would show your commitment to safety and process, along with your ability to communicate delays transparently and use time effectively.

13. Why do you want to work with us?

This question is a chance to show how your goals align with Databricks’ mission in the unified analytics and AI space. Candidates should reference Databricks’ culture, open-source contributions, and innovation in data infrastructure. Mentioning interest in their collaborative engineering environment or their approach to lakehouse architecture can demonstrate thoughtful motivation.

14. What do you tell an interviewer when they ask you what your strengths and weaknesses are?

For data engineers at Databricks, this question is an opportunity to show self-awareness and a growth mindset. You might highlight strengths like optimizing Spark performance or building robust ETL frameworks, while also acknowledging areas where you’re actively improving, such as simplifying ML model deployment. Be specific and show how your experiences reflect a proactive approach to personal and technical development.

15. How comfortable are you presenting your insights?

Databricks engineers often present findings to product teams, data scientists, or leadership. Interviewers want to know if you can explain complex systems like Delta Lake optimization or cost-saving strategies in clear terms. Discuss how you tailor your messaging depending on the audience and your experience using tools like notebooks or dashboards to communicate insights.

How to Prepare for a Databricks Data Engineer Interview

To succeed in a Databricks Data Engineer interview, preparation must go beyond coding drills. You’ll need to master technologies core to their platform—Apache Spark, Delta Lake, MLflow, and orchestration tools like Databricks Workflows. Start by reviewing system design fundamentals with a focus on building real-time and batch data pipelines. Study data modeling patterns for Lakehouse architectures, including slowly changing dimensions, schema enforcement, and partition strategies. Deep dive into performance tuning for Spark, such as optimizing joins, managing shuffle partitions, and configuring autoscaling clusters in cloud environments.

Mock interviews are one of the most effective ways to improve. You may also use our 1:1 Coaching to simulate Databricks interview questions for data engineer candidates. These tools offer feedback on structure, clarity, and problem-solving speed. For even more accurate simulation, practice with concurrency scenarios and pipeline optimization cases.

If you’re preparing for Databricks interview questions for senior data engineer roles, your preparation should emphasize architectural trade-offs, cost-aware design, and leadership examples. Practice explaining how you’ve scaled data platforms, enforced governance, or led cross-functional engineering initiatives.

By combining targeted technical study with AI-assisted mock interviews, you’ll build the fluency, speed, and confidence to stand out across every stage of the Databricks interview process.

FAQs

What Is the Average Salary for a Data Engineer at Databricks?

Average Base Salary

Average Total Compensation

Do Senior Candidates Face Different Interview Questions?

Yes. If you’re interviewing at the staff or principal level, expect deeper architecture discussions and more strategic problem-solving. Databricks interview questions for senior data engineer candidates typically explore system scalability, cost optimization in cloud environments, leadership in cross-functional teams, and design patterns for secure multi-tenant data pipelines. You’ll also be evaluated on your ability to mentor others, drive long-term platform vision, and contribute to evolving data governance standards. While foundational technical rounds remain, the expectations shift toward demonstrating architectural ownership and product impact.

Where Can I Find More Databricks Data Engineer Discussions?

For real-world insights, strategies, and shared interview experiences, check out the Interview Query Databricks Data Engineer forums. You’ll find detailed breakdowns of recent candidate experiences, example questions from each interview stage, and tips for mastering the technical and behavioral aspects of the process.

Conclusion

Whether you’re targeting behavioral rounds or system design deep dives, mastering Databricks data engineer interview questions means preparing with structure, clarity, and real-world relevance. To accelerate your prep, explore our full Databricks data engineer questions collection to practice on real problems asked by hiring teams. If you want to build core skills in Spark, Delta Lake, and pipeline design, follow our curated Databricks data engineering learning path. And if you’re looking for inspiration, check out this success story from Jeffrely Li, who cracked the onsite loop and landed a senior data engineer offer. You’ve got the roadmap. Now it’s time to execute with confidence.