Adobe Data Scientist Interview Questions & Process Guide (2025)

Introduction

The Adobe data scientist interview isn’t just a test of whether you can crunch numbers. It’s designed to see if you can apply experimentation, modeling, and business intuition at scale. At Adobe, data scientists shape how millions of users interact with Creative Cloud and Experience Cloud, running A/B tests that influence product design, building models that personalize experiences, and surfacing insights that steer billion-dollar strategies.

In this guide, we’ll walk through the Adobe data scientist role and culture, the interview process, and the technical and business questions you’re likely to face. You’ll also get prep strategies, sample scenarios, and insider tips on how to stand out. With competitive compensation and exposure to one of the largest creative ecosystems in the world, this is a role that combines scale, impact, and career growth.

Role Overview & Culture

Life as a data scientist at Adobe means balancing experimentation with real-world application. One day, you might be designing a controlled test to optimize pricing; the next, you’re collaborating with engineers to productionize a content recommendation algorithm.

The culture leans bottom-up. Individual contributors are expected to propose ideas, own their projects, and see them through. Adobe’s “Adobe For All” philosophy creates a supportive and inclusive environment, while career paths span from leading experimentation programs to specializing in causal inference, forecasting, or ML Ops. If you thrive at the intersection of analytics and innovation, Adobe offers both the playground and the resources to make an impact.

Why This Role at Adobe?

Adobe operates at a scale few companies match: its teams analyze creative usage across Photoshop, Illustrator, Acrobat, and more, while also powering enterprise-level insights through Experience Cloud. For a data scientist, that means every model you ship or experiment you run can have an outsized influence.

Many data scientists expand into leadership tracks or transition into specialized domains like personalization, forecasting, or ML infrastructure.

Most importantly, Adobe invests in long-term learning. From internal mobility to access to global datasets, you’ll get exposure to both creative and enterprise use cases, sharpening your technical depth while broadening your business impact. For anyone looking to combine data science with large-scale influence, Adobe is a rare opportunity.

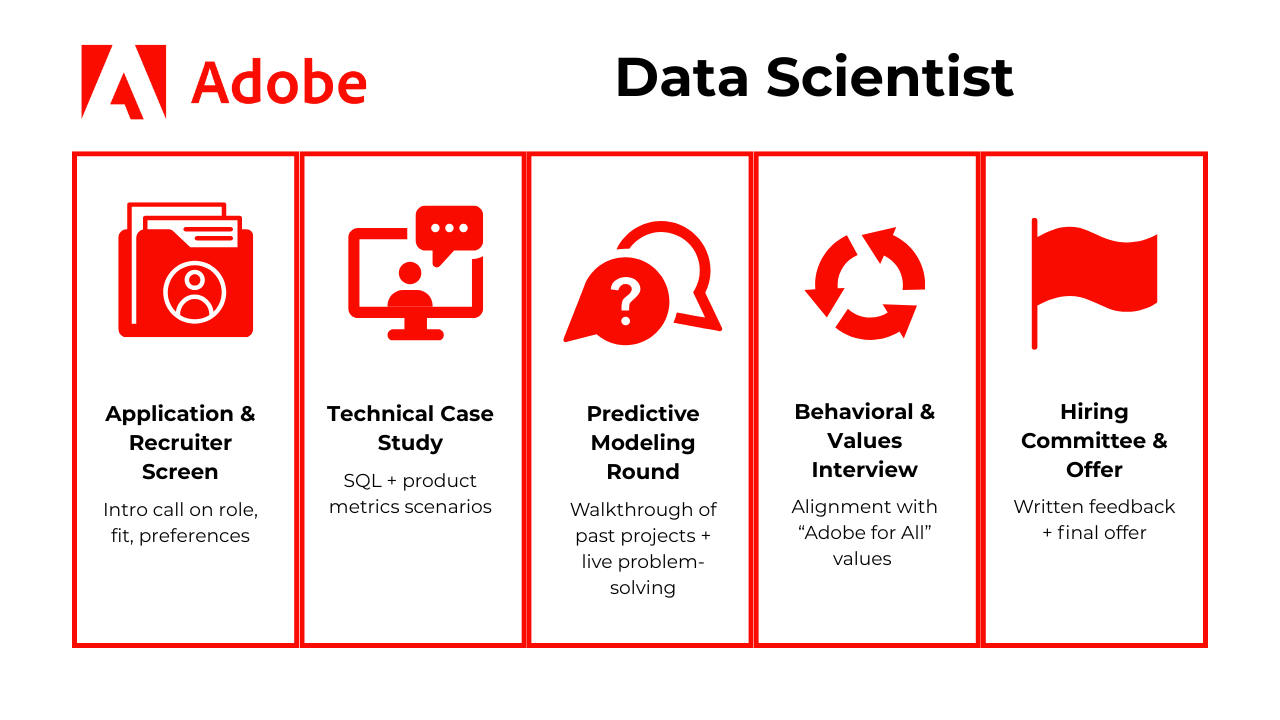

What Is the Interview Process Like for a Data Scientist Role at Adobe?

The Adobe data scientist interview process is crafted to assess your analytical thinking, modeling skills, and product intuition across several focused rounds. From SQL-heavy case studies to stakeholder-facing presentations, each step is designed to reflect the day-to-day of working on Adobe’s data science team. Here’s how the process typically unfolds:

Application & Recruiter Screen

This is your first touchpoint with Adobe. After applying, you’ll speak with a recruiter who introduces the role, clarifies team fit, and discusses location preferences. Expect a light walkthrough of your resume and a chance to highlight your technical toolkit (SQL, Python, R, ML frameworks). The goal here is to confirm alignment before you dive deeper.

Tip: Frame your experience in terms of impact (e.g., “optimized pricing model that boosted revenue by 8%”) rather than tools used. Recruiters remember outcomes.

Technical Case Study (SQL + Product Metrics)

You’ll be presented with a product scenario embedded with data challenges, such as interpreting funnel drop-offs, analyzing A/B test results, or segmenting churned users. This round blends SQL with product sense: you’ll need to query data correctly, but also explain why a particular metric matters and how it ties to business outcomes. Adobe looks for candidates who can connect raw data to real product decisions, whether that’s optimizing Creative Cloud pricing or measuring the impact of a new Experience Cloud feature.

Tip: Brush up on window functions and practice articulating why a metric matters (not just calculating it). Senior candidates may also be asked to propose a new metric that better captures user value. You can read this article about window functions and avoid common mistakes.

Predictive Modeling Round

In a one-hour session, you’ll be asked to walk through past modeling projects and explain your reasoning at each step: how you handled feature engineering, why you chose a particular algorithm, how you validated results, and what impact the model had once deployed. You may also be asked to sketch out a live solution for a problem like churn prediction or personalization. What matters most is not whether you remember every technical detail but whether you demonstrate end-to-end thinking: problem framing, model design, validation, and translation of results into business value.

Tip: Go beyond “I used XGBoost.” Be ready to explain why you chose it, what features mattered most, and how you validated impact against a business KPI.

Behavioral & Values Interview

The behavioral interview is where Adobe evaluates alignment with its “Adobe For All” values. Over the course of forty-five to sixty minutes, you’ll share stories about working cross-functionally, navigating conflict, and owning projects under ambiguity. Adobe places a premium on collaboration and inclusivity, so this is your chance to show that you can work effectively across product, engineering, and design.

Tip: Prepare one STAR story each for conflict resolution, cross-team collaboration, and driving an ambiguous project. Senior candidates should also highlight how they’ve mentored or influenced others.

Hiring-Committee Review & Offer

Your interviewers complete written feedback within 24 hours, which is then discussed in a calibration meeting. If the signal is strong, an offer is extended, usually within 5–7 business days of your final round.

Tip: If you feel you didn’t nail one round, don’t panic. Adobe looks at the overall trajectory of your interviews, not one misstep.

Behind the Scenes

Adobe’s bar-raiser system ensures a consistent hiring bar across teams by bringing in one interviewer from outside the immediate group. Interview feedback is submitted promptly (usually within a day) and evaluated collaboratively in a panel-style calibration. This process balances both technical signal and team fit.

Differences by Level

Interns and entry-level candidates often skip the predictive modeling round, instead focusing more on SQL and case interpretation. In contrast, senior candidates (e.g., Sr. data scientist, MTS 2) are typically asked to present a business-impact project they’ve led, highlighting their influence on metrics, strategy, and stakeholder alignment.

Knowing these stages transforms the Adobe data science interview from a mystery to a map.

What Questions Are Asked in an Adobe Data Scientist Interview?

Coding/Technical Questions

These Adobe data scientist interview questions cover real-world SQL and Python problems designed to assess your ability to clean, transform, and extract insights from large datasets. In Adobe data science roles, datasets often span millions of records, requiring both precision and performance.

Group a list of sequential timestamps into weekly buckets

Write a Python function using datetime slicing or pandas resample() logic. Bucketization is useful when building cohort analysis or tracking retention patterns across time.

Tip: Always specify the week anchor and timezone handling to avoid off-by-one issues with end-of-week boundaries.
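Here is a minimal sketch of the datetime approach, assuming tz-aware inputs and a week that starts on Monday 00:00 UTC. The function name `bucket_by_week` and the sample events are illustrative, not part of the original question.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def bucket_by_week(timestamps):
    """Group tz-aware datetimes into weeks anchored on Monday 00:00 UTC."""
    buckets = defaultdict(list)
    for ts in timestamps:
        ts_utc = ts.astimezone(timezone.utc)
        # weekday() is 0 for Monday, so this steps back to that week's Monday
        week_start = (ts_utc - timedelta(days=ts_utc.weekday())).date()
        buckets[week_start].append(ts)
    return dict(sorted(buckets.items()))


events = [
    datetime(2025, 1, 5, 23, 30, tzinfo=timezone.utc),  # Sunday
    datetime(2025, 1, 6, 0, 15, tzinfo=timezone.utc),   # Monday
    datetime(2025, 1, 9, 12, 0, tzinfo=timezone.utc),   # Thursday
]
weekly = bucket_by_week(events)
```

The Sunday event falls in the week starting 2024-12-30, while the other two share the week starting 2025-01-06. That boundary case is exactly where an unstated week anchor causes off-by-one errors.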

Write a function that takes a sentence and returns all word bigrams

This Python string-processing task focuses on parsing and n-gram generation. It mirrors early preprocessing stages in text analytics and topic modeling.

Tip: Normalize case and strip punctuation before building bigrams; decide whether to include stopwords based on the downstream task.
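One possible version is sketched below. It assumes that words are runs of letters, digits, and apostrophes, and that stopwords are kept; both choices should follow the downstream task.

```python
import re


def word_bigrams(sentence):
    """Return adjacent word pairs after lowercasing and dropping punctuation."""
    words = re.findall(r"[a-z0-9']+", sentence.lower())
    # Pair each word with its successor; fewer than two words yields []
    return list(zip(words, words[1:]))


bigrams = word_bigrams("Have free hours, and love children?")
```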

Write a query to count users who made additional purchases

Leverage GROUP BY, HAVING COUNT(*) > 1, and filtering for sequential actions. Purchase pattern recognition is core to customer segmentation models.

Tip: De-dupe by order_id per day if cancellations/duplicates exist; for “additional” purchases, enforce a min time delta between orders.
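To make this concrete, the sketch below runs one version of the query against an in-memory SQLite database from Python. The `transactions` schema and the rule that an "additional" purchase means buying on more than one calendar day are assumptions for illustration; the real question may define it differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (user_id INTEGER, order_id INTEGER, created_at TEXT)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        (1, 101, "2025-01-01 10:00:00"),
        (1, 102, "2025-01-01 18:30:00"),  # same day: one purchase occasion
        (2, 201, "2025-01-02 09:00:00"),
        (2, 202, "2025-01-05 14:00:00"),  # came back on a later day
        (3, 301, "2025-01-03 11:00:00"),
        (4, 401, "2025-01-01 08:00:00"),
        (4, 402, "2025-01-01 09:00:00"),
        (4, 403, "2025-01-04 12:00:00"),
    ],
)

# Count users with purchases on more than one distinct day
QUERY = """
SELECT COUNT(*) AS repeat_buyers
FROM (
    SELECT user_id
    FROM transactions
    GROUP BY user_id
    HAVING COUNT(DISTINCT DATE(created_at)) > 1
) AS t
"""
repeat_buyers = conn.execute(QUERY).fetchone()[0]
```

Using COUNT(DISTINCT DATE(created_at)) rather than COUNT(*) is what keeps user 1, who ordered twice on the same day, out of the result.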

Stem words in a sentence using the shortest root words from a dictionary

This problem blends trie-based matching and substitution logic. It’s useful for understanding stemming and vocabulary compression in NLP pipelines.

Tip: If performance lags, pre-sort roots by length and early-exit on first match; cache token→root mappings.
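The sketch below swaps the trie for a simpler set lookup over word prefixes, which returns the same shortest root and is often enough in an interview. `replace_with_roots` is a hypothetical name, and words are assumed to be whitespace-separated.

```python
def replace_with_roots(roots, sentence):
    """Replace each word with its shortest dictionary root, if any."""
    root_set = set(roots)
    max_len = max(map(len, root_set), default=0)
    cache = {}  # token -> root, so repeated words are resolved once

    def shortest_root(word):
        if word not in cache:
            cache[word] = word
            # Try prefixes from shortest to longest and stop at the first hit
            for end in range(1, min(len(word), max_len) + 1):
                if word[:end] in root_set:
                    cache[word] = word[:end]
                    break
        return cache[word]

    return " ".join(shortest_root(w) for w in sentence.split())


stemmed = replace_with_roots(
    ["cat", "bat", "rat"], "the cattle was rattled by the battery"
)
```

Checking prefixes in increasing length gives the early exit from the tip without sorting the roots first.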

Identify the average number of swipes per session

Use SQL joins, filters, and GROUP BY to aggregate session activity. It reflects usage telemetry processing in digital product experimentation.

Tip: Define a session window explicitly (e.g., 30-min inactivity cutoff) and exclude bot/test traffic via an allowlist/flag.
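If the data has no session IDs, you have to derive sessions first. The sketch below shows one way to do that in plain Python, assuming events arrive as `(user_id, swipe_time)` pairs and that a gap of more than 30 minutes starts a new session. The function name and sample data are illustrative.

```python
from datetime import datetime, timedelta


def avg_swipes_per_session(events, gap=timedelta(minutes=30)):
    """events: iterable of (user_id, swipe_time). Returns mean swipes per session."""
    sessions = 0
    swipes = 0
    last_seen = {}
    for user_id, ts in sorted(events, key=lambda e: (e[0], e[1])):
        prev = last_seen.get(user_id)
        # A user's first swipe, or one after a long pause, opens a new session
        if prev is None or ts - prev > gap:
            sessions += 1
        last_seen[user_id] = ts
        swipes += 1
    return swipes / sessions if sessions else 0.0


swipe_log = [
    ("a", datetime(2025, 1, 1, 10, 0)),
    ("a", datetime(2025, 1, 1, 10, 10)),
    ("a", datetime(2025, 1, 1, 11, 0)),  # 50-minute gap, so a new session
    ("b", datetime(2025, 1, 1, 9, 0)),
]
avg_swipes = avg_swipes_per_session(swipe_log)
```

Here four swipes spread over three sessions give an average of 4/3. In SQL, the same idea is usually expressed with LAG() over each user's events to flag session starts.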

Compute rolling 7-day active users (Python, pandas)

Useful for DAU/WAU monitoring and experiment guardrails.

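import pandas as pd

# events: user_id, event_time (tz-aware), product
df = events.assign(date=events['event_time'].dt.tz_convert('UTC').dt.normalize())

# One row per (user, day) so repeat events do not inflate counts
daily = df[['date', 'user_id']].drop_duplicates()

dau = (daily.groupby('date')['user_id']
       .nunique()
       .rename('dau')
       .to_frame()
       .sort_index())

# Summing DAU over 7 days double-counts users active on several days,
# so count distinct users inside each trailing 7-day window instead
dau['wau'] = [
    daily.loc[daily['date'].between(d - pd.Timedelta(days=6), d), 'user_id'].nunique()
    for d in dau.index
]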

Tip: For large tables, pre-bucket to daily uniques with a distributed job (e.g., Spark) before rolling windows in pandas or SQL.

Sessionized conversion rate with conditional aggregation (SQL)

Track whether each session converted and compute product-level CVR.

-- sessions(session_id, user_id, started_at, product)
-- events(session_id, event_time, event_type)

WITH conv AS (
    SELECT
        s.product,
        s.session_id,
        MAX(CASE WHEN e.event_type = 'purchase' THEN 1 ELSE 0 END) AS converted
    FROM sessions s
    LEFT JOIN events e
        ON s.session_id = e.session_id
    GROUP BY 1, 2
)
SELECT
    product,
    AVG(converted::float) AS cvr
FROM conv
GROUP BY product
ORDER BY cvr DESC;

Tip: Guard against attribution leakage: constrain events to [session.start, session.end] and ensure test/control isolation if this feeds an experiment readout.

Product/Experimentation Design Questions

These questions test your ability to define success metrics, evaluate A/B test validity, and analyze product funnels, which are critical skills for any Adobe data scientist working on experimentation or growth teams.

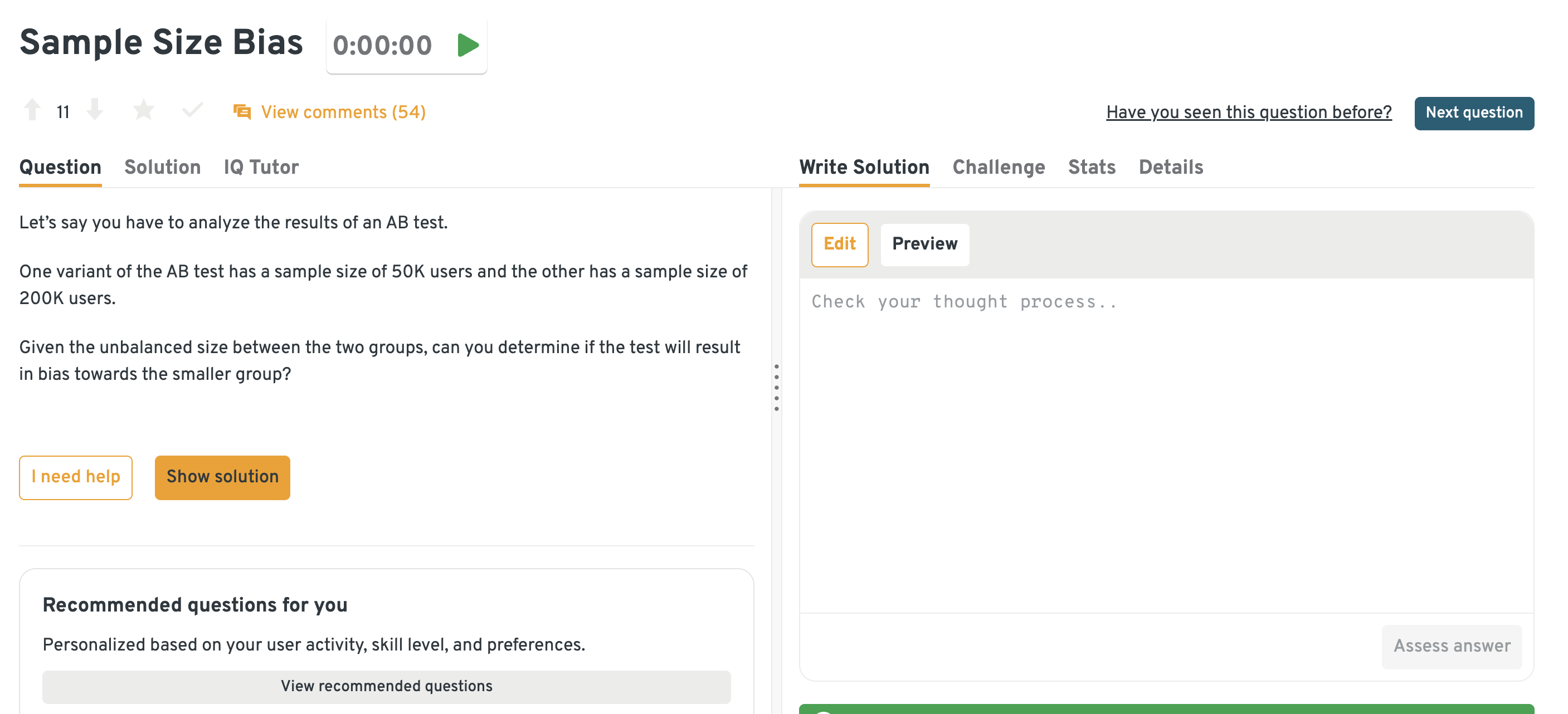

Determine if an unbalanced AB test with 50K and 5K users is valid

This question examines your knowledge of power, allocation ratios, and statistical significance in skewed test groups. Understand when unequal splits are acceptable and how to adjust your interpretation accordingly.

Tip: Unequal splits are valid as long as assignment was random. The standard error is dominated by the smaller arm, so use an unpooled variance estimate and focus on whether the 5K arm has enough power to detect the minimum detectable effect.
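To see how much the split costs you, a rough power calculation helps. The sketch below uses the normal approximation for a two-sided test on conversion rates; the 10% baseline and the 1-point absolute lift are made-up inputs, not figures from the question.

```python
from math import sqrt
from statistics import NormalDist


def power_two_proportions(p_control, lift_abs, n_control, n_treat, alpha=0.05):
    """Approximate power of a two-sided z-test for a difference in proportions."""
    p_treat = p_control + lift_abs
    # Unpooled standard error: each arm contributes its own variance
    se = sqrt(
        p_control * (1 - p_control) / n_control
        + p_treat * (1 - p_treat) / n_treat
    )
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Upper-tail rejection probability; the opposite tail is negligible
    # when the true lift is positive
    return 1 - NormalDist().cdf(z_crit - lift_abs / se)


unbalanced = power_two_proportions(0.10, 0.01, 50_000, 5_000)
balanced = power_two_proportions(0.10, 0.01, 27_500, 27_500)
```

With the same 55,000 users in total, the 50K/5K split has noticeably less power than an even split because the small arm's variance dominates the standard error. The test is still valid; it is just less sensitive.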


Assess whether the significance of one out of twenty variants is valid

You’ll need to address multiple testing corrections, like Bonferroni or false discovery rate. This highlights your understanding of controlling for Type I error in multi-variant experiments.

Tip: Don’t just name corrections. Explain tradeoffs (Bonferroni is conservative, FDR balances discovery vs false positives). Connect your choice to Adobe’s product context.
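Both corrections fit in a few lines, which makes the trade-off easy to demonstrate. This is a sketch in plain Python with invented p-values; in practice you would likely reach for a statistics library.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject only p-values at or below alpha divided by the number of tests."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure for controlling the FDR."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        # Track the largest rank k whose p-value is within (k / m) * alpha
        if p_values[idx] <= rank / m * alpha:
            cutoff = rank
    rejected = [False] * m
    for idx in order[:cutoff]:
        rejected[idx] = True
    return rejected


# Twenty variants where only one reaches p = 0.04
one_of_twenty = [0.04] + [0.20 + 0.04 * i for i in range(19)]
# A smaller family with several genuinely small p-values
mixed = [0.001, 0.008, 0.012, 0.03, 0.5]
```

On `one_of_twenty`, neither method rejects anything, so the lone p = 0.04 does not survive correction. On `mixed`, Bonferroni keeps two discoveries while Benjamini-Hochberg keeps four, which illustrates the conservative-versus-discovery trade-off.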

Design an A/B test to evaluate changes to a button

Define key metrics (CTR, conversion), determine randomization units, and set up a minimal test plan. Adobe’s product teams expect data scientists to drive experiment design from hypothesis to analysis.

Tip: Always include a guardrail metric (like bounce rate or latency) alongside success metrics; this shows you’re thinking holistically about user experience.
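A minimal test plan usually includes a sample-size estimate. The sketch below applies the standard normal-approximation formula for comparing two proportions; the 5% baseline CTR and 0.5-point minimum detectable effect are hypothetical numbers.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline, mde_abs, alpha=0.05, power=0.8):
    """Users needed per arm to detect an absolute lift of mde_abs."""
    p1, p2 = baseline, baseline + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde_abs ** 2)


n_per_arm = sample_size_per_arm(baseline=0.05, mde_abs=0.005)
n_smaller_mde = sample_size_per_arm(baseline=0.05, mde_abs=0.0025)
```

Halving the detectable effect roughly quadruples the required sample, which is a useful point to raise when stakeholders ask for sensitivity to very small lifts.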

Assess the validity of a .04 p-value in an AB test

Challenge assumptions: was the experiment stopped early, were metrics pre-registered, is the lift meaningful? This scenario trains your judgment beyond just accepting p-values.

Tip: Tie statistical significance back to business impact. For example, a p-value of .04 on a trivial 0.2% lift may not justify rollout.
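Reporting the effect size and a confidence interval next to the p-value makes this point for you. The sketch below uses a two-proportion z-test with invented counts chosen so that a very small lift still comes out significant.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_test(conv_c, n_c, conv_t, n_t, alpha=0.05):
    """Return (absolute lift, two-sided p-value, confidence interval)."""
    p_c, p_t = conv_c / n_c, conv_t / n_t
    diff = p_t - p_c
    # Pooled standard error for the test under the null of no difference
    pooled = (conv_c + conv_t) / (n_c + n_t)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_c + 1 / n_t))
    p_value = 2 * (1 - NormalDist().cdf(abs(diff) / se_pooled))
    # Unpooled standard error for the interval around the observed lift
    se_unpooled = sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return diff, p_value, (diff - z * se_unpooled, diff + z * se_unpooled)


lift, p_value, (ci_low, ci_high) = two_proportion_test(
    conv_c=100_000, n_c=1_000_000, conv_t=100_880, n_t=1_000_000
)
```

With a million users per arm, an absolute lift under a tenth of a percentage point clears p < 0.05, yet the interval's lower bound sits barely above zero. Whether that is worth shipping is a business question, not a statistical one.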

Determine if bucket assignments in an A/B test were random

Discuss methods like covariate balance checks or chi-square tests across groups. Ensuring true randomization is crucial before trusting experiment outcomes.

Tip: Don’t just check demographics. Test pre-experiment engagement metrics (DAU, spend) as well, because imbalances there can invalidate results even if basic attributes are balanced.
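A quick first check is a sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test comparing observed bucket sizes with the planned split. The sketch below computes the one-degree-of-freedom p-value with the standard library; the counts are invented.

```python
from math import erfc, sqrt


def srm_p_value(n_control, n_treat, expected_treat_share=0.5):
    """Chi-square test that bucket sizes match the planned allocation."""
    total = n_control + n_treat
    exp_treat = total * expected_treat_share
    exp_control = total - exp_treat
    chi2 = (
        (n_control - exp_control) ** 2 / exp_control
        + (n_treat - exp_treat) ** 2 / exp_treat
    )
    # Survival function of a chi-square variable with 1 degree of freedom
    return erfc(sqrt(chi2 / 2))


p_even = srm_p_value(5_000, 5_000)
p_skewed = srm_p_value(5_200, 4_800)
p_planned_uneven = srm_p_value(50_000, 5_000, expected_treat_share=1 / 11)
```

A 5,200/4,800 outcome under a planned 50/50 split yields a very small p-value and signals an assignment problem, whereas a 50K/5K outcome is fine when that ratio was the plan. The same chi-square logic extends to categorical covariates such as platform or region.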

Machine‑Learning Modeling Questions

These machine learning questions focus on modeling strategy, feature engineering, and bias mitigation, key skills for Adobe data scientists responsible for building fair and scalable models in production.

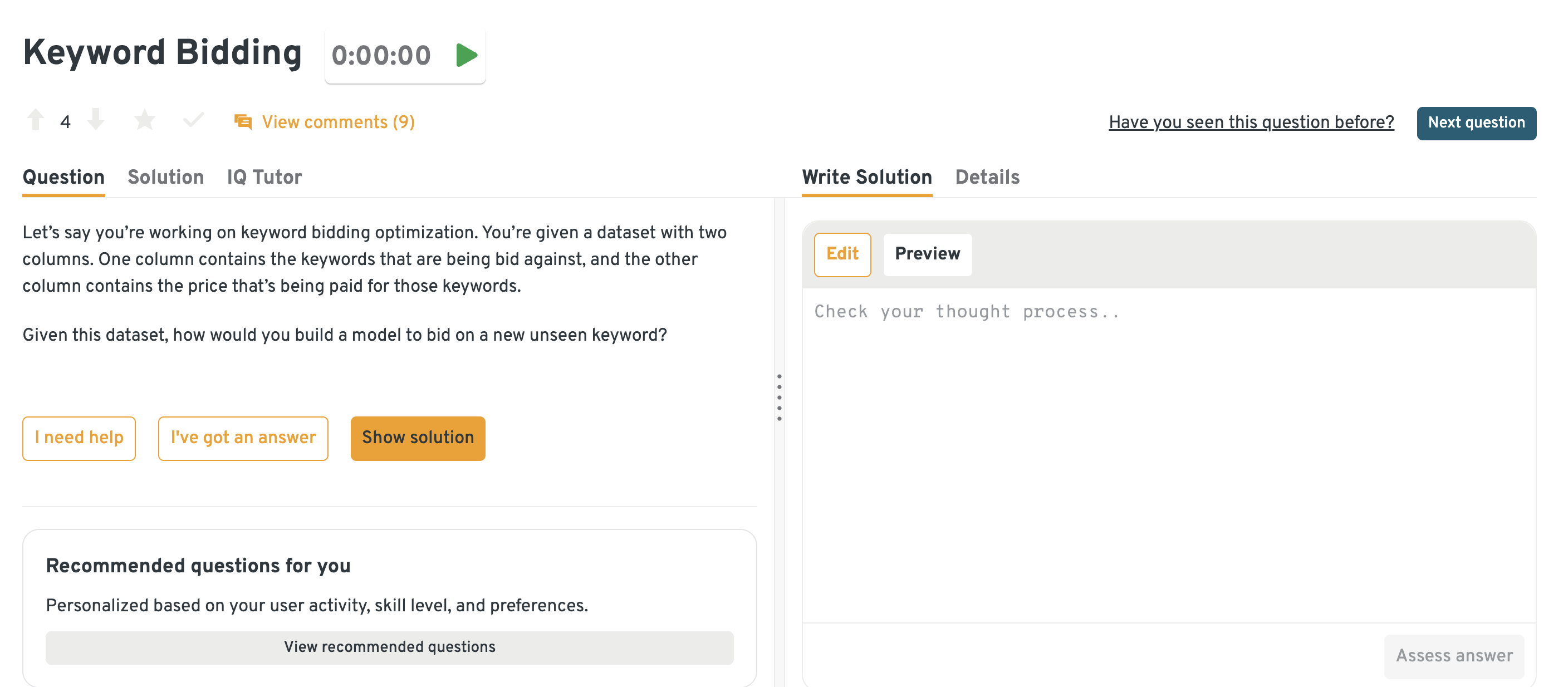

Build a model to bid on a new, unseen keyword using historical performance

Discuss regression vs classification framing, temporal leakage, and cold-start strategies. Feature engineering from historical CTR, CPC, and quality score is essential. This tests your ability to balance exploration and exploitation.

Tip: Explicitly call out how you’d prevent leakage (e.g., splitting by time, not randomly) to show real-world awareness.
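A time-based split is simple to show on a whiteboard. The sketch below assumes each record carries a `day` field (a hypothetical name) and holds out everything on or after a cutoff date.

```python
from datetime import date


def time_split(rows, cutoff, time_key="day"):
    """Train on records before the cutoff, evaluate on the rest."""
    train = [r for r in rows if r[time_key] < cutoff]
    test = [r for r in rows if r[time_key] >= cutoff]
    return train, test


keyword_stats = [
    {"keyword": "photo editor", "day": date(2025, 1, 10), "ctr": 0.031},
    {"keyword": "pdf merge", "day": date(2025, 2, 3), "ctr": 0.044},
    {"keyword": "logo maker", "day": date(2025, 3, 15), "ctr": 0.027},
    {"keyword": "video trim", "day": date(2025, 4, 1), "ctr": 0.038},
]
train, test = time_split(keyword_stats, cutoff=date(2025, 3, 1))
```

Because every training row predates every test row, features such as historical CTR cannot leak information from the evaluation period.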


Determine if 1 million Seattle ride trips suffice to build a travel-time predictor

Evaluate sample representativeness, geographic coverage, and time-of-day variation. Explain how you’d diagnose variance and generalizability. This reflects judgment in dataset curation and data volume sufficiency.

Tip: Emphasize coverage over size. A balanced dataset with fewer records often beats millions of biased ones.

Build a fraud detection model and text messaging system for banks

Design a high-precision model with class imbalance handling, such as SMOTE or weighted loss. Discuss false positives and user trust trade-offs. Also, describe the messaging trigger threshold for real-time action.

Tip: Frame your answer around precision vs recall trade-offs. Fraud is rare, so show you’d tune thresholds to minimize user disruption.
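One way to turn that trade-off into a concrete alerting rule is to pick the lowest score cutoff that still meets a precision target. The sketch below assumes you already have model scores and true labels on a validation set with at least one fraud case; the numbers are toy data.

```python
def pick_threshold(scores, labels, min_precision=0.9):
    """Lowest cutoff meeting the precision target, which maximizes recall.

    Returns (threshold, precision, recall), or None if no cutoff qualifies.
    """
    total_pos = sum(labels)
    best = None
    for t in sorted(set(scores), reverse=True):
        flagged = [y for s, y in zip(scores, labels) if s >= t]
        true_pos = sum(flagged)
        precision = true_pos / len(flagged)
        # Recall never decreases as the cutoff drops, so keep the last hit
        if precision >= min_precision:
            best = (t, precision, true_pos / total_pos)
    return best


scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 1, 0, 0, 0]
threshold, precision, recall = pick_threshold(scores, labels, min_precision=0.8)
```

Precision is not monotonic in the cutoff, so the loop checks every candidate instead of stopping at the first failure. In a messaging system, the chosen threshold becomes the trigger for sending a verification text.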

Explain why measuring bias is important in food delivery times

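Address regional disparities, demographic bias, or device-based unfairness. Present fairness metrics (e.g., equal opportunity, or prediction-error parity across groups for a continuous target like delivery time) and interventions (e.g., reweighting training data or adding fairness constraints). Bias evaluation is essential for responsible ML at Adobe.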

Tip: Name at least one fairness metric and one mitigation strategy. Interviewers want to see that you can go from diagnosis to action.

Decide how to handle missing square footage data in housing price prediction

Evaluate imputation methods: mean/median, model-based, or indicator variables. Discuss the impact of missingness on downstream model interpretability. Handling missing data is foundational to robust ML pipelines.

Tip: Mention why the data is missing (random vs not random); demonstrating awareness of the missingness mechanism shows deeper statistical maturity.
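Median imputation paired with a missingness indicator is a common baseline. The sketch below works on a list of dicts; the `sqft` column name and the listings are illustrative, and in a real pipeline the median should be computed on the training split only.

```python
from statistics import median


def impute_with_indicator(rows, column="sqft"):
    """Fill missing values with the median and flag which rows were filled."""
    observed = [r[column] for r in rows if r[column] is not None]
    fill_value = median(observed)
    imputed = []
    for r in rows:
        missing = r[column] is None
        imputed.append({
            **r,
            column: fill_value if missing else r[column],
            # The flag lets the model learn whether missingness itself is predictive
            f"{column}_missing": int(missing),
        })
    return imputed


listings = [
    {"id": 1, "sqft": 800},
    {"id": 2, "sqft": None},
    {"id": 3, "sqft": 1200},
    {"id": 4, "sqft": 1000},
]
clean = impute_with_indicator(listings)
```

If square footage is missing more often for, say, older listings, the indicator column captures that signal instead of hiding it behind the median.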

Design a churn prediction model for Adobe Creative Cloud subscriptions

Frame the task as a supervised classification problem with labels defined by cancellations within a time window. Feature engineering might include usage frequency, product mix (e.g., Photoshop + Lightroom bundle), support tickets, and billing history. Address class imbalance and describe how you’d test whether your model improves retention strategies.

Tip: Always link churn modeling back to business levers. For example, how predictions would drive targeted retention campaigns or pricing experiments.
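Label definition is where many churn models go wrong, so it is worth sketching explicitly. The example below assumes a 30-day prediction horizon after a feature snapshot date; the function name and dates are hypothetical.

```python
from datetime import date, timedelta


def churn_label(snapshot, cancel_date, horizon_days=30):
    """1 if the subscriber cancels within the horizon after the snapshot.

    Returns None for users who cancelled on or before the snapshot, since
    they should be excluded from the training population.
    """
    if cancel_date is None:
        return 0
    if cancel_date <= snapshot:
        return None
    return int(cancel_date <= snapshot + timedelta(days=horizon_days))


snapshot = date(2025, 3, 1)
labels = [
    churn_label(snapshot, date(2025, 3, 20)),  # cancels inside the window
    churn_label(snapshot, date(2025, 5, 1)),   # cancels later
    churn_label(snapshot, None),               # still subscribed
    churn_label(snapshot, date(2025, 2, 1)),   # already gone at snapshot
]
```

All features should be computed from data available on or before the snapshot date, so the model never sees activity from the window it is trying to predict.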

Behavioral & Culture‑Fit Questions

In an Adobe data scientist interview, expect behavioral prompts that probe how you navigate ambiguity, communicate technical insights, and collaborate across functions. Responses should follow the STAR format and reflect both your analytical rigor and your ability to work in Adobe’s inclusive, bottom-up culture.

One common behavioral prompt in an Adobe data scientist interview is: Tell me about a time when your analysis influenced a product decision.

Use the STAR format to highlight the business context, your analytical approach, and how your recommendation led to measurable changes. Adobe values scientists who can translate complex findings into actionable product strategy.

Example: I analyzed customer churn by subscription tier, found a 15% higher cancellation rate among monthly users, and recommended an annual-plan incentive. The product team implemented the change, reducing churn by 8%.

Tip: Always tie the story back to measurable business results. Adobe wants to see impact, not just analysis.

Describe a time when you had to explain a technical concept to a non-technical stakeholder.

Emphasize your ability to distill complexity, use analogies, and align your messaging to audience needs. Communication is key when working with design, product, and marketing teams.

Example: When explaining logistic regression output to marketing, I compared the coefficient size to “levers” they could pull in campaigns. This made the results intuitive and actionable.

Tip: Show adaptability. Pick analogies and visuals that meet your audience where they are.

Tell me about a time you faced conflicting priorities from different teams.

Walk through how you clarified goals, negotiated trade-offs, and kept the project moving forward. Adobe teams often operate with cross-functional tension, so navigating this gracefully is critical.

Example: Product wanted deeper personalization, but engineering pushed for a quick launch. I proposed a phased release: baseline personalization first, followed by a more advanced model in sprint two. Both sides aligned, and delivery stayed on schedule.

Tip: Emphasize how you created alignment rather than just compromise. Strong collaboration is about shared wins.

Give an example of a project where your initial hypothesis was wrong. What did you do?

Focus on intellectual humility and iterative thinking. Adobe appreciates scientists who value learning over ego and adapt their approach based on data.

Example: I expected longer trial periods to increase conversions, but analysis showed no lift. Instead of forcing the idea, I pivoted to studying onboarding steps, which uncovered friction points we later resolved.

Tip: Stress curiosity and flexibility. Adobe wants data scientists who learn quickly and adapt their approach.

Describe a situation where you had to clean or validate messy data before starting your analysis.

Explain how you detected anomalies, handled missing values, or flagged upstream data issues. Good science begins with good data.

Example: While analyzing user logs, I found duplicate IDs inflating engagement metrics. After reconciling with engineering, we patched the pipeline and re-ran metrics, leading to accurate reporting.

Tip: Highlight not only your technical fixes but also your collaboration with upstream teams. Data quality is a shared responsibility.

How to Prepare for a Data Scientist Role at Adobe

Study Adobe Product Analytics

Across an Adobe data scientist interview loop, you’ll encounter case studies rooted in real product metrics—like monthly active users, feature adoption, or customer churn. Explore Adobe’s earnings calls, blog posts, or product dashboards to understand how data supports decision-making across Creative Cloud and Experience Cloud.

Rehearse A/B Test Design

Be ready to explain how you’ve designed, launched, and analyzed experiments—from hypothesis to metric interpretation. Adobe values candidates who can clearly communicate tradeoffs, statistical rigor, and real-world constraints using the STAR method.

Practice SQL Joins + Window Functions

Brush up on SQL challenges involving user funnels, cohort retention, and rolling aggregates. Many interviews include writing queries live while discussing your logic out loud.

Build a Project Showcasing Generative-AI Metrics

Highlight your initiative by modeling performance metrics for image synthesis, prompt diversity, or ranking systems—especially if applying to Firefly or Sensei teams. Bonus if you visualize outcomes clearly for a non-technical audience.

Record Mock Interviews and Iterate

Simulate full interview loops and review your recordings to catch filler words, unclear logic, or missed opportunities. Iteration builds confidence—and helps polish both content and delivery.

FAQs

What Is the Average Salary for an Adobe Data Scientist?


Glassdoor reports a total pay range of around $161,000–$232,000/year for data scientists at Adobe (all experience levels combined). Base pay averages around $148K, with bonus and stock pushing total compensation higher.

- Entry/L2 data scientist: Total compensation around $181,000/year, typically includes ~$133K base, $34.6K stock, $13K bonus.

- Mid/L3 data scientist: Total compensation about $226,000/year, with ~$158,000 base, $49,750 in equity, and $18,500 bonus.

- Senior/L4 data scientist: At this level, compensation climbs to around $252,000/year with a base of ~$188,000 plus equity (~$43,000) and bonus (~$21,000).


How do research scientist salaries compare?

Adobe data scientists in the U.S. have a total compensation range from around $181,000 (L2) up to about $359,000 for higher levels (L5). Median total comp is ≈ $235,000.

Adobe Research Scientists, in contrast, command significantly higher pay, especially at senior levels. Their salary packages tend to start much higher and go up to $585,000+, depending on level (base + equity + bonus). The median total compensation is around $329,000.

What does a Data Science intern earn at Adobe?

The Adobe Data Science intern salary typically ranges from $40 to $50 per hour, depending on education level and location. Interns also receive housing stipends, access to mentorship programs, and opportunities to present work to executive stakeholders, making it one of the more competitive internship programs in tech.

Is the Interview Different for Research Scientists?

Yes—while research scientists go through a similar loop, they face deeper technical interviews centered around model innovation, academic rigor, and originality. Expect questions about your published work, algorithmic novelty, and ability to translate cutting-edge research into production-ready frameworks. A research talk or deep-dive presentation is often required.

Is it hard to crack the Adobe data science interview?

Yes. Adobe’s interviews are considered challenging because they test both technical rigor (SQL, Python, ML modeling) and business judgment (product metrics, experiment design). Strong preparation in end-to-end case studies and A/B testing is essential.

Is it hard to become an Adobe data scientist?

Competition is high since Adobe attracts candidates with strong technical backgrounds. Beyond coding and modeling, successful candidates show they can influence product strategy with data and thrive in Adobe’s collaborative, innovation-driven culture.

Are data scientists still relevant?

Absolutely. Despite the rise of automated tools and LLMs, data scientists remain critical in designing experiments, validating causality, mitigating bias, and bridging technical insights with product strategy. Adobe still hires data scientists across product, marketing, and research teams. You can read more about being a data scientist in 2025.

What can I do differently during my Adobe interview?

Go beyond technical answers, and tie every solution back to business impact. For example, when solving a SQL funnel question, explain how the insight could improve conversion or retention. Adobe values scientists who think like product owners. You can practice using mock interviews with professionals in the data industry. This can help you stand out even under pressure.

What kind of questions are asked in a data science interview?

Expect a mix: SQL queries on large datasets, Python modeling tasks, A/B testing design, ML trade-off discussions, and behavioral prompts about collaboration and ambiguity. Adobe also emphasizes communication—be prepared to explain results to non-technical stakeholders.

You can practice these questions on Interview Query with multiple types of questions.

Which are the four main components of data science?

Most frameworks highlight: (1) Data collection & cleaning, (2) Statistical analysis & modeling, (3) Machine learning & algorithms, and (4) Communication & decision-making. Adobe expects proficiency across all four.

Conclusion

With targeted prep, Adobe data scientist interview questions become predictable. Whether you’re analyzing product metrics, designing experiments, or building ML models, knowing the format and expectations lets you focus your energy where it counts.

Ready to take action? Start with our Adobe Data Engineer and Adobe Software Engineer guides for curated questions, strategies, and walkthroughs.

Want an edge before your interview? Schedule a 1-on-1 mock loop with professionals and get tailored feedback to sharpen your prep.