50+ Essential Python Interview Questions for Data Analyst Roles (With Answers)

Introduction

Python is the heart of data analysis. Whether you’re cleaning spreadsheets, analyzing trends, or building dashboards, it’s the tool every data analyst needs to master.

Most companies, big or small, use Python to make sense of data. Its clean syntax and powerful libraries like Pandas, NumPy, and Matplotlib make it easy to turn raw numbers into insights.

In interviews, though, it’s not just about writing code. Recruiters want to see how you think with Python. Can you solve a messy data problem? Automate a task? Explain what your code means in business terms? That’s what separates a good analyst from a great one.

In this guide, we’ll walk through 50+ Python interview questions you’re likely to face, from basics to real-world coding challenges. You’ll also learn how to talk about your projects, communicate your thought process, and avoid common mistakes.

By the end, you’ll know exactly what to expect and how to show that you’re ready to use Python like a pro to ace your next data analyst interview.

Interview Structure: What to Expect in Data Analyst Interviews

Every data analyst interview follows a similar flow, with a mix of technical questions, real-world scenarios, and communication skills. But depending on the company, it might happen in a single sitting or spread across multiple rounds.

If you’re just starting out, a data analyst learning path can help you focus on the key skills (Python, SQL, statistics, and business communication) so you’re prepared for every interview stage.

Let’s break down what each stage looks like and what interviewers are really testing for.

The Initial Screening

Think of this as your warm-up round. It’s usually a short phone or Zoom chat with a recruiter or HR representative. They’ll ask about your background, what you’re looking for, and why you’re interested in the role.

You won’t get deep technical questions here, but be prepared to talk about your experience with tools like Python, SQL, and Excel in simple terms. A good approach is to share one or two quick examples of how you’ve used data to solve real problems like:

“In my last project, I used Python and Pandas to clean customer survey data and visualize response trends. The insights helped identify the top three pain points, which improved product satisfaction scores by 10%.”

That’s all you need, one quick example that shows both skill and impact. Think of this round as your elevator pitch and keep your tone friendly and confident. This round is about showing enthusiasm and communication skills as much as it is about your technical foundation.

The Technical Round

This is where the fun begins, and your Python and data skills take center stage. You might face:

- Live coding questions on shared screens

- Short take-home challenges

- Concept-based questions on Pandas, NumPy, or data structures

Expect problems like cleaning a dataset, calculating averages, or transforming data for analysis. Interviewers aren’t just checking whether your code runs; they want to see your thought process.

Remember that they’re not just watching your code; they’re listening to your logic. Narrate your process as you go:

“I’ll start by checking for missing values using df.isnull().sum(). If a column has too many nulls, I’d drop it; otherwise, I’d fill it with the median. Once it’s clean, I’ll group by region and calculate average sales with groupby() to compare performance.”

If something goes wrong, don’t freeze. Talk it out:

“Looks like the grouping didn’t work because of inconsistent region names. I’d check for trailing spaces with df['region'].unique() and clean them using str.strip().”

That kind of thinking aloud shows confidence and collaboration, and it’s exactly what interviewers want to see.
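The two narrations above can be sketched in pandas. This is only a minimal illustration: the `region` and `sales` column names, the 50% null threshold, and the sample data are assumptions made for the example, not part of any specific interview dataset.

```python
import pandas as pd

def clean_and_summarize(df, null_threshold=0.5):
    """Drop mostly-empty columns, fill numeric gaps, then average sales by region."""
    # Share of missing values per column (df.isnull().sum() gives the raw counts).
    null_share = df.isnull().mean()
    df = df.loc[:, null_share <= null_threshold].copy()
    # Fill the remaining numeric gaps with each column's median.
    numeric_cols = df.select_dtypes(include="number").columns
    df[numeric_cols] = df[numeric_cols].fillna(df[numeric_cols].median())
    # Strip stray whitespace so "East " and "East" land in the same group.
    df["region"] = df["region"].str.strip()
    return df.groupby("region")["sales"].mean()

# Hypothetical sample data for illustration only.
sample = pd.DataFrame({
    "region": ["East", "East ", "West", "West"],
    "sales": [100.0, None, 300.0, 500.0],
    "notes": [None, None, None, "promo"],
})
result = clean_and_summarize(sample)
```

In an interview, the threshold and the choice of median are worth stating aloud as assumptions, since the right values depend on the dataset.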

The Case or Business Round

Now you’ll be tested on how you think with data. The interviewer might hand you a dataset or describe a scenario, something like:

“Our user engagement dropped by 20% last quarter. How would you analyze this problem?”

Here, your goal isn’t to write perfect code but to explain your analytical reasoning. Start by clarifying the problem. Ask what engagement means here: clicks, time spent, or sessions? Then outline your steps:

Define metrics: “I’d start by defining engagement metrics like daily active users, session time, or feature usage.”

Explore the data: “I’d use SQL to pull user activity by date, region, and platform. In Pandas, I’d visualize trends over time using line charts to see when the drop began.”

Compare segments: “If mobile users dropped more than desktop users, I’d drill deeper. Maybe an app update caused performance issues, so I’d check release logs or feedback data.”

Handle missing or inconsistent data: “If the dataset is incomplete, I’d flag it and run checks on data integrity before drawing conclusions.”

Connect to action: “If the analysis shows engagement fell after an app update, I’d recommend A/B testing a previous version or rolling back specific changes to confirm the cause.”

This round shows whether you can connect analysis to impact, which is the real skill every company values.

The Behavioral Round

Even the best technical analysts need strong soft skills. In this round, interviewers look at how you collaborate, handle feedback, communicate insights, and solve problems under pressure.

Expect questions like:

- “Tell me about a time you solved a tough data problem.”

- “How do you manage tight deadlines with messy data?”

- “Describe a time you worked with a non-technical stakeholder.”

This is your chance to tell short, specific stories. For example:

“In one project, a marketing manager wanted to measure campaign success using total clicks. I explained why conversion rate was a more accurate metric since it normalized by audience size. I visualized both metrics side-by-side, and once they saw the difference, we shifted focus to conversions. That change helped refine future campaigns.”

Keep your answers short and structured using the STAR method (Situation, Task, Action, Result). This is your chance to show your personality, so talk about teamwork, challenges, and what you learned along the way.

Interviewers want to see that you can explain insights clearly, handle feedback gracefully, and keep projects moving even when things get messy.

Remote and Hybrid Interviews

Virtual interviews have become the standard for data roles, especially since many analyst teams now operate in hybrid or fully remote setups. While the technical questions stay the same, the experience feels different, and small details can make or break your impression.

Before the interview, check your internet connection, camera, and microphone, and make sure your surroundings are quiet and distraction-free. Have all your tools like Jupyter notebooks, datasets, or coding environments open and ready to go so you can jump right in.

During the interview, communicate clearly. Narrate your thought process as you work so the interviewer can follow your reasoning. For example:

“I’m checking for null values before merging these tables to prevent mismatches. Once I know which columns have missing data, I’ll decide whether to drop or impute them. After cleaning, I’ll run a quick validation to confirm the merge didn’t create duplicate records.”

That kind of running commentary helps the interviewer understand your logic and keeps them engaged.
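The check, merge, and validate sequence in that narration could look like the sketch below. The table and column names are hypothetical, and `validate="many_to_one"` is just one way to guard against duplicated rows.

```python
import pandas as pd

def safe_merge(left, right, key):
    """Report nulls in the join key, merge, and confirm no rows were duplicated."""
    # Null keys cannot be matched meaningfully, so count them before joining.
    null_keys = {"left": int(left[key].isnull().sum()),
                 "right": int(right[key].isnull().sum())}
    # validate="many_to_one" makes pandas raise MergeError if `right` repeats a key.
    merged = left.merge(right, on=key, how="left", validate="many_to_one")
    # A left join against unique keys must keep the row count unchanged.
    assert len(merged) == len(left), "merge changed the number of rows"
    return merged, null_keys

# Hypothetical tables for illustration only.
orders = pd.DataFrame({"customer_id": ["c1", "c2", "c2", None],
                       "amount": [10, 20, 30, 40]})
customers = pd.DataFrame({"customer_id": ["c1", "c2"], "segment": ["A", "B"]})
merged, null_keys = safe_merge(orders, customers, "customer_id")
```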

Ask clarifying questions before solving anything to show you’re methodical. If something breaks, explain what you’re testing or verifying instead of going silent. Small habits like staying calm, explaining your reasoning, and showing situational awareness help you appear confident, collaborative, and in control, even through a screen.

Core Python Interview Questions for Data Analyst Roles

Every data analyst interview starts with the basics. These questions test how well you understand Python’s core concepts like data types, loops, and functions. Even if you’ve used libraries like Pandas or NumPy, interviewers want to know you’re solid on the fundamentals.

Tip: Keep answers short and structured. Try to add a quick example or explain how you’ve used the concept in a past project.

Read more: Python Interview Questions for Data Engineers

Here are some common Python questions and how to approach them along with some code snippets to help you solve each one:

1 . Find words not in both strings

This question tests your ability to use sets and string operations in Python. It’s designed to see if you can manipulate text and identify unique elements efficiently. You can solve it by splitting both strings into words, converting them to lowercase, and using the symmetric difference of two sets to find non-overlapping words.

def mismatched_words(str1, str2):
    return list(set(str1.lower().split()) ^ set(str2.lower().split()))

Tip: Mention that sets are ideal for comparing data since they make the process faster and simpler than using loops.

2 . Determine whether there exists a permutation of an input string that is a palindrome.

This problem checks your understanding of frequency counting and logic conditions. It’s used to test whether you can apply mathematical reasoning to string data. Count each character’s frequency and confirm that no more than one character has an odd count; only then can a palindrome permutation exist.

from collections import Counter

def perm_palindrome(s):
    return sum(v % 2 for v in Counter(s).values()) <= 1

Tip: Walk through an example during your explanation to show you understand the logic behind the condition, not just the syntax.

3 . Reconstruct the path of a trip so that the trip tickets are in order.

This task tests your ability to organize and link data using mappings. It evaluates whether you can reconstruct an ordered chain from unordered relationships. Create a dictionary mapping each departure to its destination, find the starting point, and iteratively build the path until no more destinations exist.

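def plan_trip(flights):
    # Map each departure city to its destination.
    route = {a: b for a, b in flights}
    # The starting city is the only departure that is never a destination.
    start = (set(route.keys()) - set(route.values())).pop()
    path = []
    while start in route:
        path.append([start, route[start]])
        start = route[start]
    return path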

Tip: Relate this type of problem to real-world data work such as ordering time-series or user journey information.

This question measures your skills in iteration and aggregation using dictionaries. It tests if you can pair elements across lists and compute group totals efficiently. Use Counter() or a simple dictionary to sum all tips by user and return the one with the highest value.

from collections import Counter

def most_tips(user_ids, tips):
    total = Counter()
    for u, t in zip(user_ids, tips):
        total[u] += t
    return total.most_common(1)[0][0]

Tip: Explain how you could expand this logic to handle large datasets or missing values to demonstrate practical thinking.

This problem tests your ability to apply statistical computation manually. It checks if you can code standard deviation from scratch using core Python. Calculate the mean, then the variance, and finally the square root of variance to get the standard deviation.

from math import sqrt

def compute_deviation(data):
    return {d['key']: round(sqrt(sum((x - sum(d['values'])/len(d['values']))**2 for x in d['values'])/len(d['values'])), 2)
            for d in data}

Tip: Clarify that you are applying the population standard deviation formula unless the problem states otherwise to show precision.
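To make the population-versus-sample distinction concrete, the standard library exposes both formulas. The values below are made up for illustration.

```python
from statistics import pstdev, stdev

# Hypothetical values for illustration only.
values = [2, 4, 4, 4, 5, 5, 7, 9]

population_sd = pstdev(values)  # divides the squared deviations by n
sample_sd = stdev(values)       # divides by n - 1 (Bessel's correction)
```

The sample version is always slightly larger for the same data, which is why it helps to say which one you are computing.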

6 . Write a function that counts all n-grams in a string and returns their frequency as a dictionary.

This question focuses on looping and frequency tracking. It’s meant to test if you understand substring manipulation and dictionary counting. Slide a window of length n across the string and increment the count for each n-gram in a dictionary.

from collections import Counter

def ngram_count(word, n):
    # Tally every length-n window; the result is empty when n > len(word).
    return dict(Counter(word[i:i+n] for i in range(len(word) - n + 1)))

Tip: Discuss potential edge cases like when n equals the string length to show attention to detail.

7 . Swap the values of keys ‘a’ and ‘b’ in a dictionary without using any extra variable.

This tests your grasp of Python tuple unpacking and in-place operations. It evaluates whether you can update values concisely and safely. Use: d['a'], d['b'] = d['b'], d['a'] to swap the two values without creating a new variable.

def swap_values(d):
    d['a'], d['b'] = d['b'], d['a']
    return d

Tip: Point out that using tuple unpacking is considered Pythonic and reflects efficient and clean coding practice.

8 . Find the user who tipped the most from two lists: one of user_ids and one of tips.

This repeats the same aggregation logic and checks for iteration and value comparison. Combine both lists using zip(), track totals in a dictionary, and find the user with the maximum tip sum.

def top_tipper(users, tips):
    totals = {}
    for u, t in zip(users, tips):
        totals[u] = totals.get(u, 0) + t
    return max(totals, key=totals.get)

Tip: Reinforce that this approach can scale effectively for grouped data, which is a key skill in analytics.

9 . Write a function to check if a given string is a palindrome.

This question tests your ability to manipulate and compare strings. It ensures you can perform simple checks cleanly. Compare the string to its reverse (s == s[::-1]) to determine if it reads the same both ways.

def is_palindrome(s):
    return s == s[::-1]

Tip: Emphasize that simple and readable solutions often stand out more than overly complex code.

This problem assesses your understanding of sorting and list indexing. It checks whether you can navigate nested lists and extract ranked values. Sort each sublist in descending order, select the fifth element, and collect the results before sorting them again.

def list_fifths(numlists):
    return sorted([sorted(lst, reverse=True)[4] for lst in numlists])

Tip: Note that sorting has a time complexity of O(n log n) to demonstrate awareness of algorithm efficiency.
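If efficiency comes up, one alternative worth mentioning is `heapq.nlargest`, which avoids fully sorting each sublist. The sketch below is a variant, not the expected answer, and skipping sublists with fewer than five elements is an assumption; the version above would raise an IndexError instead.

```python
import heapq

def list_fifths_heap(numlists):
    """Fifth-largest value of each sublist, sorted ascending; skips lists shorter than 5."""
    fifths = []
    for lst in numlists:
        if len(lst) >= 5:
            # nlargest(5, lst) returns the five largest values in descending order.
            fifths.append(heapq.nlargest(5, lst)[-1])
    return sorted(fifths)

example = list_fifths_heap([[1, 2, 3, 4, 5, 6, 7], [9, 8, 7, 6, 5], [1, 2]])
```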

11 . Write a function that returns the maximum number in a list, or None if the list is empty.

This checks your grasp of conditional handling and list traversal. It’s used to test how safely you write code that handles empty inputs. You can simply use return max(nums) if nums else None.

def max_num(nums):
    return max(nums) if nums else None

Tip: Explain why input validation is essential since real-world data can contain empty or missing values.

This question tests indexing and slicing comprehension. It evaluates whether you understand Python’s inclusive/exclusive range behavior. Use slicing, as in sum(vehicles[start:end]), to compute totals between checkpoints.

def range_vehicles(vehicles, start, end):
    return sum(vehicles[start:end])

Tip: Be clear about how Python’s end index in slicing is exclusive to highlight your precision.
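A quick check makes the exclusive end index visible. The counts below are hypothetical.

```python
vehicles = [5, 8, 3, 10, 7]  # hypothetical counts per checkpoint

exclusive_total = sum(vehicles[1:3])      # indexes 1 and 2 only
inclusive_total = sum(vehicles[1:3 + 1])  # indexes 1, 2, and 3
```

If the interviewer means "from checkpoint 1 through checkpoint 3", the slice needs `end + 1`, so it is worth confirming which convention the question uses.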

13 . Write a function that sums all the digits in a floating-point number represented as a string.

This one tests string filtering and numeric type conversion. It checks if you can iterate through a string, detect digits, and sum them correctly. Convert characters to integers when char.isdigit() is True.

def digit_accumulator(s):
    return sum(int(c) for c in s if c.isdigit())

Tip: Mention that this logic also works when the string includes non-digit characters, showing robustness.

This reinforces your ability to combine iteration, dictionary comprehension, and math logic. It’s about summarizing multiple groups with statistical metrics. Compute deviation for each key using a helper function that calculates mean and variance manually.

from math import sqrt

def std_map(data):
    def std(v):
        m = sum(v)/len(v)
        return round(sqrt(sum((x-m)**2 for x in v)/len(v)), 2)
    return {d['key']: std(d['values']) for d in data}

Tip: If you have used Pandas functions like df.std(), connect your logic to those real-world methods for context.

This problem tests frequency analysis and conditional logic. It checks whether you can count occurrences while excluding specific characters. Use a Counter to track counts and rebuild the string, skipping spaces and discarded characters.

from collections import Counter

def append_frequency(sentence, discard_list):
    c = Counter(ch for ch in sentence if ch != " " and ch not in discard_list)
    return "".join(ch + str(c[ch]) if ch in c else ch for ch in sentence if ch != " ")

Tip: Highlight that this problem mirrors text preprocessing steps, linking it to practical NLP or data cleaning scenarios.

Data Analyst Interview Questions on Python Libraries: Pandas, NumPy, Matplotlib, and Scikit-learn

Once you’ve covered the basics, interviewers often test how well you know Python’s key data libraries, especially Pandas and NumPy. These tools form the foundation of nearly every data analyst workflow.

Pandas is used for working with structured data. NumPy powers fast numerical operations. Other common libraries include Matplotlib or Seaborn for visualization, and scikit-learn for applying basic machine learning models in analytics settings.

In interviews, you might be asked how to merge and reshape datasets with Pandas, perform calculations with NumPy arrays, and visualize trends or correlations in data.

Tip: Show you can connect these libraries together, like cleaning data with Pandas, analyzing it with NumPy, and visualizing insights with Matplotlib. It demonstrates real-world problem-solving, not just tool knowledge.

Read more: How to Prepare for a Data Analyst Interview

1 . Build a random forest model from scratch with specific conditions

This question tests your understanding of machine learning fundamentals and how decision trees combine in ensemble methods. It evaluates whether you can simulate the logic of a random forest without using Scikit-learn.

To solve it, use Pandas and NumPy for data handling. Iterate through permutations of feature splits and track majority votes for a target variable. Each “tree” in the forest evaluates subsets of features, and the final prediction is made through majority voting.

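import pandas as pd
from itertools import permutations

def random_forest(new_point, data):
    features = list(data.columns[:-1])
    # Assumes new_point lists its values in the same order as the feature columns.
    point = dict(zip(features, new_point))
    votes = []
    for perm in permutations(features):
        X = data.copy()
        # Look up each value by column name so permuting the columns keeps the pairing intact.
        for col in perm:
            X = X[X[col] == point[col]].drop(columns=col)
        if not X.empty and not X['Target'].mode().empty:
            votes.append(X['Target'].mode()[0])
    return int(pd.Series(votes).mode()[0])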

Tip: Focus on explaining how random forests reduce overfitting by combining multiple tree predictions instead of relying on one.

This problem tests your knowledge of Markov chains and transition probabilities. It evaluates how well you can use NumPy to model probability transitions over time.

The approach involves representing different rain states (e.g., Rain–Rain, Rain–No Rain) in a transition matrix. You then raise this matrix to the nth power using numpy.linalg.matrix_power() to determine how probabilities evolve with each day. Finally, compute the total probability of rain on day n based on transitions from the initial state.

import numpy as np
from numpy.linalg import matrix_power

def rain_days(n):
    P = np.array([[0.2, 0.8, 0, 0],
                  [0, 0, 0.6, 0.4],
                  [0.6, 0.4, 0, 0],
                  [0, 0, 0.2, 0.8]])
    Pn = matrix_power(P, n)
    return Pn[0,0] + Pn[0,2]

Tip: Explain how transition matrices help predict future outcomes based only on current conditions, which is a key concept in probabilistic modeling.

3 . Implement the k-means clustering algorithm in Python from scratch

This question evaluates your understanding of unsupervised learning and numerical optimization. It tests whether you can implement clustering logic using NumPy’s array operations.

To solve it, randomly initialize k centroids, assign each data point to the nearest centroid using Euclidean distance, then recompute centroids as the mean of their assigned points. Repeat the assignment and update steps until the centroids stabilize (no further change). Use NumPy for vectorized distance calculations and mean updates for efficiency.

import numpy as np

def kmeans(X, k, iterations=100):
    # Pick k distinct data points as the initial centroids.
    centroids = X[np.random.choice(X.shape[0], k, replace=False)]
    for _ in range(iterations):
        clusters = [np.argmin(np.linalg.norm(x-centroids, axis=1)) for x in X]
        new_centroids = np.array([X[np.array(clusters)==i].mean(axis=0) for i in range(k)])
        # Stop once the centroids no longer move.
        if np.allclose(centroids, new_centroids):
            break
        centroids = new_centroids
    return centroids

Tip: Mention how k-means can be sensitive to initialization and that multiple runs help achieve better clustering stability.
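The "multiple runs" idea can be sketched as several restarts that keep the run with the lowest inertia (the sum of squared distances to the nearest centroid). The function names, the `n_init` and `seed` parameters, and the empty-cluster handling are assumptions made for this sketch, not part of the question.

```python
import numpy as np

def kmeans_once(X, k, rng, iterations=100):
    """One k-means run; returns (centroids, inertia)."""
    centroids = X[rng.choice(X.shape[0], k, replace=False)]
    for _ in range(iterations):
        # Distance from every point to every centroid, shape (n_points, k).
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Keep the old centroid if a cluster ends up empty.
        new_centroids = np.array([
            X[labels == i].mean(axis=0) if np.any(labels == i) else centroids[i]
            for i in range(k)
        ])
        if np.allclose(centroids, new_centroids):
            break
        centroids = new_centroids
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    inertia = float((dists.min(axis=1) ** 2).sum())
    return centroids, inertia

def kmeans_best_of(X, k, n_init=10, seed=0):
    """Run k-means n_init times and keep the run with the lowest inertia."""
    rng = np.random.default_rng(seed)
    runs = [kmeans_once(X, k, rng) for _ in range(n_init)]
    return min(runs, key=lambda run: run[1])

# Two well-separated hypothetical blobs.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])
centroids, inertia = kmeans_best_of(X, k=2)
```

Comparing runs by inertia is a common convention; other selection criteria are possible.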

4 . Find the median rainfall for days on which it rained in a given dataframe.

This question tests your data filtering and aggregation skills with Pandas. It checks if you can isolate relevant rows and compute statistical summaries.

Filter the dataframe to include only days where rainfall is greater than zero, then use the median() function to calculate the median rainfall amount. This demonstrates your ability to quickly analyze conditional subsets of data using Pandas.

def median_rainfall(df_rain):
    return df_rain.loc[df_rain['rainfall'] > 0, 'rainfall'].median()

Tip: Emphasize that data filtering and aggregation are fundamental for quick exploratory analysis and business insights.

5 . Impute missing cheese prices in a dataframe with the median value of non-missing entries.

This question focuses on data cleaning and imputation techniques using Pandas. It tests whether you know how to handle missing values effectively.

To solve it, calculate the median of all available prices using median() and replace missing ones with this value via fillna(). This ensures numerical consistency while avoiding bias introduced by dropping missing rows.

def cheese_median(df_cheeses):
    median_price = df_cheeses['price'].median()
    df_cheeses['price'] = df_cheeses['price'].fillna(median_price)
    return df_cheeses

Tip: Show you understand how median imputation preserves distribution shape better than mean imputation for skewed data.
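A tiny comparison shows why the choice matters on skewed data. The prices below are invented for illustration.

```python
import pandas as pd

# Hypothetical skewed prices: one luxury item and one missing value.
prices = pd.Series([4.0, 5.0, 6.0, None, 85.0])

mean_fill = prices.fillna(prices.mean())      # pulled up by the outlier
median_fill = prices.fillna(prices.median())  # stays near the typical price
```

Here the mean-filled value lands far above three of the four observed prices, while the median-filled value sits among them.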

This problem tests your grouping and aggregation skills in Pandas. It checks how well you summarize detailed transactional data into meaningful features.

Group the data by customer ID and gender, then use agg() to calculate the most recent sale (max() on date column) and total purchases (count() on sale ID). The resulting dataframe becomes a high-level customer summary useful for analytics dashboards.

def customer_summary(df):
    return (df.groupby(['customer_id', 'gender'])
              .agg(most_recent_sale=('date_of_sale', 'max'),
                   order_count=('date_of_sale', 'count'))
              .reset_index())

Tip: Point out that summarizing data this way is common in reporting pipelines and customer segmentation tasks.

This question tests your understanding of data sampling and stratification. It evaluates whether you can manually perform a stratified split without using external libraries.

To solve it, use Pandas filtering to separate “yes” and “no” classes, sample proportionally from each class to form a training set, and compute how many belong to the “no” group. Use the remaining rows as the test set. This ensures balanced representation of target classes in the training data.

import pandas as pd

def stratified_split(df, col, frac=0.8):
    # Sample the same fraction from each class so the training set keeps the class ratio.
    train = pd.concat([
        df[df[col] == 'yes'].sample(frac=frac, random_state=42),
        df[df[col] == 'no'].sample(frac=frac, random_state=42)
    ])
    return len(train[train[col] == 'no'])

Tip: Emphasize that stratification helps prevent model bias when class distributions are imbalanced.
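If the interviewer asks for both the training and test sets, a variant along these lines could work. It is a sketch that assumes the dataframe has a unique index, and the function name and sample data are hypothetical.

```python
import pandas as pd

def stratified_train_test(df, col, frac=0.8, seed=42):
    """Sample `frac` of each class for training; the remaining rows form the test set."""
    train = df.groupby(col).sample(frac=frac, random_state=seed)
    # Relies on a unique index: every row not drawn for training goes to the test set.
    test = df.drop(train.index)
    return train, test

# Hypothetical data: 10 "yes" rows and 5 "no" rows.
data = pd.DataFrame({"label": ["yes"] * 10 + ["no"] * 5, "x": range(15)})
train, test = stratified_train_test(data, "label")
```

Both splits keep the original 2:1 class ratio, which is easy to confirm with value_counts().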

This question assesses your data joining and relational thinking using Pandas. It evaluates whether you can use joins to enrich incomplete datasets.

Perform a merge() between the two dataframes on the city column to attach state information where missing. Use how='left' to preserve all rows from the address table and fill gaps with matched city–state pairs.

def complete_addresses(df_addresses, df_cities):
    return df_addresses.merge(df_cities, on='city', how='left')

Tip: Explain how left joins are key in analytics pipelines to enrich or validate data without losing observations.

9 . Generate a random sample from a standard normal distribution.

This question checks your knowledge of random sampling and probability distributions using NumPy. It tests if you can produce statistically meaningful random samples.

Use np.random.randn() to generate samples from a normal distribution with mean 0 and standard deviation 1. You can specify the sample size as a parameter.

import numpy as np

def normal_sample(n):
    return np.random.randn(n)

Tip: Note that understanding sampling methods is critical for simulations, bootstrapping, and A/B testing in analytics.
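For reproducible simulations, NumPy's Generator API is an alternative to np.random.randn(). The function name and `seed` parameter below are choices made for this sketch.

```python
import numpy as np

def normal_sample_seeded(n, seed=None):
    """Draw n values from N(0, 1) using NumPy's Generator API."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal(n)

# The same seed reproduces the same draw.
a = normal_sample_seeded(1000, seed=123)
b = normal_sample_seeded(1000, seed=123)
```

Passing an explicit seed makes a bootstrap or A/B simulation repeatable, which is useful when someone else needs to verify the result.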

10 . Transform a dataframe of user IDs and full names into one containing only the first names.

This problem tests your ability to manipulate string data within Pandas columns. It evaluates whether you can efficiently extract and clean data.

Apply the str.split() method on the full name column and use .str[0] to retrieve only the first names. Combine this with the user ID column to return a cleaned dataframe.

def first_names_only(df):
    df['first_name'] = df['full_name'].str.split().str[0]
    return df[['user_id', 'first_name']]

Tip: Mention that vectorized string methods in Pandas are faster and cleaner than using manual loops for text operations.

11 . Pivot and reshape a dataframe to summarize total sales by region and quarter.

This question tests your data reshaping and summarization skills in Pandas. It evaluates whether you can use pivot tables to transform long-format data into an easy-to-analyze summary.

Use pivot_table() to aggregate sales data by region and quarter, applying sum as the aggregation function. This shows your ability to summarize and reorganize datasets effectively.

def sales_pivot(df):
    return df.pivot_table(values='sales', index='region', columns='quarter', aggfunc='sum')

Tip: Knowing how to reshape data is key for exploratory analysis and reporting workflows.

12 . Compute column-wise means and normalize an array using NumPy broadcasting.

This problem checks your understanding of NumPy vectorization and broadcasting rules. It tests if you can perform arithmetic operations efficiently across arrays of different shapes.

Use array.mean(axis=0) to compute column means, then subtract these from each column using broadcasting. This eliminates loops and leverages NumPy’s speed advantage for large-scale numerical data.

import numpy as np

def normalize_array(arr):
    col_means = arr.mean(axis=0)
    return arr - col_means

Tip: Explain how vectorization avoids explicit loops, making operations on large datasets much faster.
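The function above centers each column. If the interviewer also expects unit variance, the same broadcasting pattern extends to a full z-score. This is a sketch; leaving constant columns at zero is an assumption.

```python
import numpy as np

def standardize_columns(arr):
    """Center each column and scale it to unit standard deviation."""
    col_means = arr.mean(axis=0)  # shape (n_cols,)
    col_stds = arr.std(axis=0)    # population std (ddof=0)
    # Leave constant columns at zero instead of dividing by zero.
    safe_stds = np.where(col_stds == 0, 1.0, col_stds)
    # (n_rows, n_cols) combined with (n_cols,) broadcasts across rows.
    return (arr - col_means) / safe_stds

# Hypothetical array with one constant column.
arr = np.array([[1.0, 10.0, 5.0],
                [3.0, 20.0, 5.0],
                [5.0, 30.0, 5.0]])
z = standardize_columns(arr)
```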

13 . Visualize the correlation between numerical variables using a heatmap.

This question tests your skills in data visualization and correlation analysis. It checks whether you can communicate insights using Seaborn and Matplotlib.

Use Pandas’ corr() to compute correlation between numeric columns, then visualize it with Seaborn’s heatmap() for a quick overview of relationships between variables.

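import seaborn as sns
import matplotlib.pyplot as plt

def plot_correlation(df):
    # numeric_only=True skips text columns, which would otherwise raise an error in recent pandas versions.
    corr = df.corr(numeric_only=True)
    sns.heatmap(corr, annot=True, cmap='coolwarm')
    plt.show()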

Tip: Mention that visualizations help uncover trends and relationships that aren’t immediately visible through raw numbers.

14 . Standardize numerical features in a dataset using Scikit-learn.

This problem evaluates your familiarity with data preprocessing using Scikit-learn. It tests if you can prepare data for machine learning models.

Use StandardScaler from sklearn.preprocessing to scale numerical features so they have zero mean and unit variance, a common preprocessing step before training models.

from sklearn.preprocessing import StandardScaler

def scale_features(df):
    scaler = StandardScaler()
    df_scaled = scaler.fit_transform(df)
    return df_scaled

Tip: Emphasize that scaling improves model convergence and comparability between features of different magnitudes.

15 . Plot a histogram to visualize the distribution of sales across regions.

This question tests your ability to visualize and interpret data distributions using Matplotlib. It’s meant to see if you can convert raw numerical data into insights through visual storytelling.

Use plt.hist() to create a histogram of sales data, customizing bins and labels for readability. This helps communicate whether data is skewed, uniform, or normally distributed.

import matplotlib.pyplot as plt

def plot_sales_distribution(df):
    plt.hist(df['sales'], bins=10, color='skyblue', edgecolor='black')
    plt.title('Sales Distribution by Region')
    plt.xlabel('Sales')
    plt.ylabel('Frequency')
    plt.show()

Tip: Highlight that visualizing distributions is one of the most effective ways to detect anomalies, outliers, or skewness.

Coding Challenges: Real-World Data Analysis Scenarios

This is where interviews get hands-on. Instead of testing theory, you’ll solve problems that reflect real data analyst workflows such as cleaning messy data, transforming formats, analyzing trends, and presenting insights. These questions test your Python fluency, logic, and data intuition. The goal isn’t just to get the right answer but to demonstrate structured, efficient thinking.

Tip: Narrate your reasoning while coding. Walk through your assumptions, outline your steps, and explain why your approach makes sense. Interviewers value clarity as much as correctness.

Read more: SQL Interview Questions for Data Analysts

1 . Write a function that reads a CSV file into a pandas DataFrame, removes outliers based on z-score, and returns the cleaned dataset.

This question tests your data preprocessing and anomaly detection skills. It evaluates whether you can detect and remove extreme values that skew analysis. To solve it, read the CSV file using pandas.read_csv(), compute the z-score for numeric columns using scipy.stats.zscore, and filter out rows where any value’s z-score exceeds a threshold (typically ±3). This results in a cleaner dataset suitable for analysis.

import pandas as pd
from scipy import stats

def remove_outliers(file_path):
    df = pd.read_csv(file_path)
    # Keep only rows where every numeric column is within 3 standard deviations of its mean.
    df = df[(abs(stats.zscore(df.select_dtypes(include='number'))) < 3).all(axis=1)]
    return df

Tip: Outlier removal is a critical step in ensuring that extreme values don’t distort averages or regression models.

This question tests your ability to clean and fill missing values using Pandas. It evaluates whether you can choose the right imputation method for skewed numeric data. To solve it, compute the median value for non-missing entries in the price column, then use fillna() to replace the missing prices. This approach ensures that missing values don’t bias further calculations.

def cheese_median(df):
    price_median = df.Price.median()
    df.Price = df.Price.fillna(price_median)
    return df

Tip: Median imputation is robust against outliers and preserves data integrity better than mean imputation in most datasets.

3 . Transform a dataframe to contain only user ids and first names.

This question tests your data transformation and string manipulation skills in Pandas. It evaluates whether you can reshape text-based columns cleanly. Use Pandas string methods to split the full name column and retain only the first token as the first name. Return a new dataframe with user IDs and first names.

def first_name_only(users_df):
    users_df['first_name'] = users_df['name'].str.split(' ').str[0]
    return users_df[['user_id', 'first_name']]

Tip: This demonstrates your ability to clean user data, which is essential in standardizing names or merging records.

This question tests your data normalization and mathematical reasoning. It checks whether you can rescale data to improve comparability. Compute the minimum and maximum grades, then apply the normalization formula (x - min) / (max - min) to each grade using list comprehension. Return a new list of tuples with normalized values.

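def normalize_grades(data):
    grades = [g for _, g in data]
    min_g, max_g = min(grades), max(grades)
    span = max_g - min_g
    # Guard against division by zero when every grade is identical
    if span == 0:
        return [(n, 0.0) for n, _ in data]
    return [(n, (g - min_g) / span) for n, g in data]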

Tip: Normalizing values is a crucial preprocessing step when comparing variables on different scales or preparing data for ML models.

5 . Interpolate missing temperature data in a time-series dataframe using linear interpolation.

This question checks your ability to handle missing time-series data. It tests whether you understand temporal relationships in continuous variables. Use Pandas’ groupby() to separate cities, then run interpolate(method='linear') on the temperature column through transform(), which returns values aligned with the original index. This fills in gaps based on surrounding observations, assuming the rows are already in time order within each city.

def interpolate_temps(df):
    # transform() keeps the original index, so the result assigns back cleanly
    df['temperature'] = df.groupby('city')['temperature'].transform(
        lambda s: s.interpolate(method='linear')
    )
    return df

Tip: Time-series interpolation is essential for accurate forecasting when data collection is incomplete or irregular.
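A small worked example can make the per-city behavior concrete. This is a sketch on hypothetical readings that are assumed to be sorted by date within each city; the column names follow the function above.

```python
import pandas as pd

# Hypothetical readings, already sorted by date within each city.
df = pd.DataFrame({
    "city": ["A", "A", "A", "B", "B", "B", "B"],
    "temperature": [10.0, None, 14.0, 20.0, None, None, 26.0],
})

# Interpolate inside each city so one city's values never fill another's gaps.
df["temperature"] = df.groupby("city")["temperature"].transform(
    lambda s: s.interpolate(method="linear")
)

print(df)
```

City A's single gap lands midway between 10 and 14, and city B's two gaps are spaced evenly between 20 and 26.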

6 . Write a Python function to calculate month-over-month sales growth from a sales dataset.

This question evaluates your data analysis and trend calculation skills. It checks whether you can compare metrics over time to identify performance changes. Group sales data by month, compute total revenue per month, and use pct_change() to calculate the percentage growth from one month to the next.

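def monthly_sales_growth(df):
    # Total sales per month (groupby sorts the month keys)
    df_month = df.groupby('month')['sales'].sum().reset_index()
    # The first month has no prior month, so its NaN growth is reported as 0
    df_month['growth'] = df_month['sales'].pct_change().fillna(0)
    return df_month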

Tip: Calculating growth rates helps translate raw sales data into actionable business insights.
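If an interviewer asks what pct_change() actually computes, it helps to be able to write the arithmetic by hand. The sketch below is a plain-Python equivalent on hypothetical monthly totals; the helper name is illustrative, and it assumes no month has zero sales (which would divide by zero).

```python
def pct_change(values):
    """Period-over-period growth; the first entry has no predecessor."""
    growth = [None]
    for prev, cur in zip(values, values[1:]):
        growth.append((cur - prev) / prev)
    return growth

monthly_sales = [100.0, 120.0, 90.0]  # hypothetical monthly totals
growth = pct_change(monthly_sales)

for month, g in enumerate(growth, start=1):
    label = "n/a" if g is None else f"{g:+.0%}"
    print(f"month {month}: {label}")
```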

7 . Write a function to merge and deduplicate customer records from multiple sources.

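This question tests your data integration and deduplication skills. It evaluates how you merge overlapping data sources cleanly. Use pd.concat() to combine multiple dataframes, then apply drop_duplicates(subset='customer_id', keep='last') to remove redundant entries. Note that keep='last' retains the last occurrence in concatenation order, so it preserves the latest record only if the sources are passed oldest to newest.

def merge_customers(df_list):
    # Stack all sources; ignore_index avoids repeated index labels
    merged = pd.concat(df_list, ignore_index=True)
    # keep='last' retains the final occurrence of each customer_id
    return merged.drop_duplicates(subset='customer_id', keep='last')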

Tip: Deduplication is crucial in analytics pipelines to prevent inflated metrics or duplicate reporting.
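When the sources carry a timestamp, sorting on it before deduplicating makes "keep the latest record" explicit instead of relying on concatenation order. The sketch below assumes a hypothetical updated_at column and made-up customer rows; neither appears in the original question.

```python
import pandas as pd

# Hypothetical sources; the CRM row for customer 1 is newer than the billing row.
crm = pd.DataFrame({
    "customer_id": [1, 2],
    "email": ["a@new.example", "b@example.com"],
    "updated_at": pd.to_datetime(["2024-03-01", "2024-01-15"]),
})
billing = pd.DataFrame({
    "customer_id": [1, 3],
    "email": ["a@old.example", "c@example.com"],
    "updated_at": pd.to_datetime(["2024-01-10", "2024-02-20"]),
})

merged = pd.concat([crm, billing], ignore_index=True)

# Sort oldest to newest so keep="last" really means "most recently updated".
latest = (
    merged.sort_values("updated_at")
    .drop_duplicates(subset="customer_id", keep="last")
    .sort_values("customer_id")
    .reset_index(drop=True)
)

print(latest)
```

Without the sort, customer 1 would end up with the older billing email simply because billing was concatenated last.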

8 . Identify and remove duplicate rows in a dataset while keeping the first occurrence.

This question assesses your data cleaning and quality assurance knowledge. It’s designed to test your comfort with Pandas’ built-in data correction tools. Apply drop_duplicates() to eliminate duplicate entries, keeping only the first occurrence. This operation ensures your dataset is accurate and unique per record.

def clean_duplicates(df):
    return df.drop_duplicates(keep='first')

Tip: Duplicates can distort statistics or double-count results, so detecting and cleaning them is vital in all data workflows.

9 . Write a function to calculate the correlation between numerical features in a dataset and plot the top correlated pairs.

This question tests your ability to analyze relationships between variables using Pandas and visualization. Compute pairwise correlations using df.corr(numeric_only=True), keep each variable pair once (the upper triangle of the matrix), sort by absolute value, and plot the top correlated variable pairs using Matplotlib or Seaborn.

import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt

def top_correlations(df, n=5):
    corr = df.corr(numeric_only=True).abs()
    # Upper triangle only: drops self-pairs and mirrored (A, B) / (B, A) duplicates
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    top_pairs = upper.stack().dropna().sort_values(ascending=False).head(n)
    sns.barplot(x=top_pairs.values, y=[f"{a} & {b}" for a, b in top_pairs.index])
    plt.show()
    return top_pairs

Tip: Correlation analysis reveals key patterns like feature redundancy or business drivers, which are essential in data storytelling.

10 . Write a function to detect missing values, summarize their count and percentage, and return a clean report.

This question evaluates your data profiling and exploratory analysis ability. It checks whether you can diagnose missing data before deciding how to handle it. Use isnull().sum() to count missing values per column, divide by total rows to get percentages, and present the results in a summary DataFrame.

def missing_report(df):
    missing = df.isnull().sum()
    percent = (missing / len(df)) * 100
    return pd.DataFrame({'missing_count': missing, 'missing_%': percent})

Tip: Before imputing or dropping data, always understand where and why values are missing — it can reveal collection or entry issues.

Watch Next: Top 5 Insider Interview Questions Data Analysts Must Master Before Any Interview!

In this video, Jay Feng, co-founder of Interview Query, dives into five of the most frequently asked data analyst interview questions, from handling over-connected users who post less to simulating truncated distributions. You’ll see how to break down each prompt, choose the right methods, implement clear Python solutions, and tie your answer back to the business context for maximum impact.

Advanced Python Interview Questions: Statistics, Algorithms, and Business Impact

Once you’ve cleared the basics, interviews often dive deeper to test how you use Python for analysis that drives real decisions. These questions connect technical skills with statistical reasoning and business understanding.

Expect topics like:

- Statistical analysis: Mean, median, variance, correlation, hypothesis testing

- Algorithms: Sorting, searching, and data transformation techniques

- Data visualization: Using Matplotlib, Seaborn, or Plotly to explain insights

- Business context: Translating results into recommendations or actionable insights

You might analyze a dataset to find key metrics, build a simple regression model, or explain how a Python script supports business goals like increasing sales or reducing churn.

Tip: Focus on explaining the why behind your analysis, not just the code. Interviewers look for analysts who can connect Python results to real business impact.

This question evaluates your data interpretation and hypothesis testing skills in a business context. It checks how you’d diagnose a behavioral trend and propose data-driven solutions.

Start by analyzing correlations between number of friends and posting frequency. Control for confounding variables like time spent on the platform or content type. Then, segment users to see if high friend counts lead to lower engagement or just a shift in behavior (e.g., liking/commenting more). Use regression or causal inference techniques to isolate effects and suggest interventions, like improving content discovery for highly connected users.

Tip: Focus on identifying whether the correlation implies causation, and propose actionable steps that could improve user engagement.
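To illustrate what "controlling for a confounder" means in practice, here is a minimal sketch with NumPy. The data is synthetic and deliberately constructed so that posting depends only on hours spent on the platform; the variable names are illustrative and not taken from a real dataset.

```python
import numpy as np

# Synthetic data built so that posting depends only on hours spent:
# posts = 1 + 2 * hours. Users with more friends happen to spend fewer hours.
friends = np.array([100, 200, 300, 400, 500, 600], dtype=float)
hours = np.array([6, 5, 5, 3, 2, 1], dtype=float)
posts = 1 + 2 * hours

# Naive view: regress posts on friends alone.
naive_slope = np.polyfit(friends, posts, 1)[0]

# Controlled view: include hours as a second predictor.
X = np.column_stack([np.ones_like(friends), friends, hours])
coef, *_ = np.linalg.lstsq(X, posts, rcond=None)
intercept, friends_effect, hours_effect = coef

print(f"naive slope on friends:  {naive_slope:.4f}")
print(f"friends effect, controlling for hours: {friends_effect:.4f}")
print(f"hours effect: {hours_effect:.4f}")
```

The naive slope is negative, which looks like "more friends, fewer posts", but once hours is in the model the friends coefficient is essentially zero. Real data will not be this clean, so treat it as a way to explain the idea rather than a full causal analysis.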

This problem tests your statistical quality control and data validation thinking. It examines how you’d troubleshoot measurement or process variation.

Begin by collecting historical data on box weights and counts. Use control charts or standard deviation analysis to determine if the variation is random or systematic. If the error is consistent, investigate calibration or machine logic. If random, consider sampling errors or faulty sensors. Use Python’s pandas and matplotlib to visualize deviations and summarize metrics.

import pandas as pd
import matplotlib.pyplot as plt

def check_packet_variance(df, expected=25):
    # Deviation of each box from the expected packet count
    df['deviation'] = df['packets'] - expected
    plt.hist(df['deviation'], bins=10)
    plt.title('Packet Count Deviations')
    plt.show()
    return df['deviation'].describe()

Tip: Combine statistical insight with practical business reasoning — you’re not just debugging, you’re ensuring consistent product quality.
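The control-chart idea mentioned above can be sketched with the standard library alone. This assumes a hypothetical baseline period that is known to be in control, derives limits at three standard deviations around its mean, and flags later observations that fall outside them. All counts are made up for illustration.

```python
from statistics import mean, stdev

def control_limits(baseline, k=3):
    """Lower and upper limits at k sample standard deviations from the mean."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - k * spread, center + k * spread

def out_of_control(values, limits):
    """Return the observations that fall outside the control limits."""
    lower, upper = limits
    return [v for v in values if v < lower or v > upper]

baseline = [25, 24, 26, 25, 25, 24, 26, 25]  # hypothetical in-control period
limits = control_limits(baseline)
flagged = out_of_control([25, 26, 30, 24], limits)

print(f"limits: {limits[0]:.2f} to {limits[1]:.2f}, flagged: {flagged}")
```

Deriving the limits from a separate baseline matters: if the suspect values are included when estimating the spread, they inflate it and can hide themselves.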

3 . How would you test whether certain survey responses were filled randomly rather than truthfully?

This question tests your understanding of statistical hypothesis testing and data integrity checks. Compare observed response distributions to expected ones using a Chi-square goodness-of-fit test. Random answers often appear uniformly distributed, while genuine responses cluster around logical options. Use Python’s scipy.stats.chisquare() to test for uniformity: a small p-value rejects uniformity, while a large one means the responses are consistent with random answering.

from scipy.stats import chisquare
import numpy as np

def test_randomness(responses):
    # Count each option that appears (np.bincount would add an empty bucket
    # for 0 when options are coded 1..k)
    _, observed = np.unique(responses, return_counts=True)
    # With no expected frequencies, chisquare tests against a uniform distribution
    stat, p_value = chisquare(observed)
    return p_value

Tip: Explain that detecting random responses ensures survey reliability and helps filter out invalid data before analysis.
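It can help to show the statistic behind the test by hand. The sketch below computes the chi-square goodness-of-fit statistic against a uniform expectation for two hypothetical sets of answer counts on a five-option question, then compares it with 9.488, the 5% critical value for 4 degrees of freedom. The helper name and the counts are illustrative.

```python
def chi_square_uniform(counts):
    """Chi-square statistic of observed counts against a uniform expectation."""
    expected = sum(counts) / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)

even_counts = [20, 20, 20, 20, 20]      # looks like random clicking
clustered_counts = [5, 10, 50, 25, 10]  # answers cluster on one option

CRITICAL_5PCT_DF4 = 9.488  # chi-square critical value, alpha = 0.05, df = 4

for name, counts in [("even", even_counts), ("clustered", clustered_counts)]:
    stat = chi_square_uniform(counts)
    verdict = "not uniform" if stat > CRITICAL_5PCT_DF4 else "consistent with uniform"
    print(f"{name}: statistic = {stat:.2f} -> {verdict}")
```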

4 . Write a function to return all prime numbers from an array of integers.

This question evaluates your understanding of algorithmic problem-solving and efficiency. Use a helper function to check primality by verifying divisibility up to the square root of each number. Then apply list comprehension to filter prime values.

import math

def get_primes(arr):
    def is_prime(n):
        if n < 2:
            return False
        # A composite n has a divisor no larger than its square root
        for i in range(2, math.isqrt(n) + 1):
            if n % i == 0:
                return False
        return True

    return [x for x in arr if is_prime(x)]

Tip: Highlight time complexity: for n numbers with maximum value m, this approach runs in O(n√m), which is efficient enough for small datasets but can be optimized using the Sieve of Eratosthenes for larger inputs.
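Since the Sieve of Eratosthenes comes up as the follow-up optimization, here is a minimal sketch of it. The function names are illustrative; the sieve is built once up to the largest input value and then used as a lookup, which pays off when the array is large relative to its maximum.

```python
def primes_up_to(limit):
    """Sieve of Eratosthenes: all primes less than or equal to limit."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= limit:
        if is_prime[p]:
            # Smaller multiples of p were already crossed out by smaller primes.
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
        p += 1
    return [n for n, flag in enumerate(is_prime) if flag]

def get_primes_sieve(arr):
    """Filter arr down to its primes using one sieve up to max(arr)."""
    if not arr:
        return []
    prime_set = set(primes_up_to(max(arr)))
    return [x for x in arr if x in prime_set]

print(primes_up_to(30))
print(get_primes_sieve([10, 7, 4, 13, 1, 2, -3]))
```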

5 . Write a function to rotate a matrix by 90 degrees clockwise.

This question checks your 2D array manipulation and algorithmic thinking. To rotate a matrix 90° clockwise, first transpose it (swap rows and columns) using zip(*matrix), then reverse each row.

def rotate_matrix(matrix):
    # zip(*matrix) transposes; reversing each transposed row completes the clockwise turn
    return [list(row)[::-1] for row in zip(*matrix)]

Tip: Mention that understanding matrix transformations is foundational for graphics, data grids, and image processing algorithms.

This question tests your ability to compare algorithmic efficiency across implementations. The recursive method is elegant but inefficient (O(2ⁿ)); the iterative method is linear; memoization improves recursion by storing computed results.

# Recursive
def fib_rec(n):
    return n if n < 2 else fib_rec(n-1) + fib_rec(n-2)

# Iterative
def fib_iter(n):
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a

# Memoized
from functools import lru_cache

@lru_cache(maxsize=None)
def fib_memo(n):
    return n if n < 2 else fib_memo(n-1) + fib_memo(n-2)

Tip: When asked to compare methods, always discuss time and space complexity to show depth beyond syntax.
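One way to make the complexity difference tangible is to count how many times each function body runs. The sketch below instruments a naive and a cached version with a hypothetical call counter; the function names are illustrative and separate from the implementations above.

```python
from functools import lru_cache

calls = {"naive": 0, "memo": 0}

def fib_naive(n):
    calls["naive"] += 1
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)

@lru_cache(maxsize=None)
def fib_cached(n):
    calls["memo"] += 1  # only runs on a cache miss
    return n if n < 2 else fib_cached(n - 1) + fib_cached(n - 2)

result_naive = fib_naive(20)
result_cached = fib_cached(20)

print(f"fib(20) = {result_naive}")
print(f"naive calls: {calls['naive']}, memoized calls: {calls['memo']}")
```

For n = 20 the naive version makes 21,891 calls while the cached version evaluates each of the 21 distinct inputs once, at the cost of storing those results.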

This question evaluates your grasp of inferential statistics and hypothesis testing in Python. The t-value measures how far the sample mean deviates from the hypothesized population mean, in units of the standard error. Compute it as:

t = (mean - population_mean) / (std / √n).

import numpy as np

def t_value(df, mu0):
    x = df['var']
    # ddof=1 gives the sample standard deviation
    return (x.mean() - mu0) / (x.std(ddof=1) / np.sqrt(len(x)))

Tip: Even if not asked to compute p-values, mention that smaller p-values would indicate a statistically significant difference.
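A short worked example shows how the t-value connects to a significance decision. This sketch uses only the standard library on a hypothetical sample of eight values, and compares the result with 2.365, the two-sided 5% critical value for 7 degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(sample, mu0):
    """t statistic for a one-sample test against the hypothesized mean mu0."""
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)  # stdev divides by n - 1
    return (mean(sample) - mu0) / standard_error

sample = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical measurements
t = one_sample_t(sample, mu0=4)

T_CRITICAL_5PCT_DF7 = 2.365  # two-sided, alpha = 0.05, df = n - 1 = 7
significant = abs(t) > T_CRITICAL_5PCT_DF7

print(f"t = {t:.3f}, significant at 5%: {significant}")
```

Here the sample mean of 5 sits above the hypothesized 4, but the t-value stays below the critical value, so the difference would not be called significant at the 5% level.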

8 . Write a function to compute the interquartile range (IQR) from an unsorted list of numbers.

This question tests your understanding of spread and percentile computation. Find the 25th and 75th percentiles using NumPy’s percentile() function, which handles unsorted input without an explicit sort, then subtract Q1 from Q3.

import numpy as np

def find_iqr(nums):
    q1, q3 = np.percentile(nums, [25, 75])
    return q3 - q1

Tip: The IQR is less sensitive to outliers than standard deviation, so emphasize its importance in robust statistical summaries.
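Because the IQR ignores the tails, it is often used to flag outliers with the common 1.5 × IQR rule. The sketch below applies that rule to a hypothetical unsorted list; the multiplier k and the function name are assumptions for illustration, not part of the original question.

```python
import numpy as np

def iqr_outliers(nums, k=1.5):
    """Values lying more than k * IQR below Q1 or above Q3."""
    q1, q3 = np.percentile(nums, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [x for x in nums if x < lower or x > upper]

values = [7, 100, 3, 1, 5, 8, 2, 6, 4]  # hypothetical, unsorted
flagged = iqr_outliers(values)

print(f"flagged as outliers: {flagged}")
```

With Q1 = 3 and Q3 = 7, the fences sit at -3 and 13, so only the 100 is flagged and the quartiles themselves are unaffected by it.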

9 . Simulate a truncated normal distribution given a percentile threshold, mean, and standard deviation.

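This question evaluates your understanding of probability distributions and simulation techniques. Use scipy.stats.truncnorm to simulate random values from a truncated normal distribution. Truncation points must be given as standardized z-scores, so convert the percentile threshold to a z-score with norm.ppf() first.

import numpy as np
from scipy.stats import norm, truncnorm

def truncated_dist(percentile_threshold, m, sd, n=1000):
    # Convert the percentile (e.g. 0.75) into a z-score cutoff
    z = norm.ppf(percentile_threshold)
    # As written, keeps the tail above the cutoff; use (-np.inf, z) for the lower tail
    a, b = z, np.inf
    return truncnorm.rvs(a, b, loc=m, scale=sd, size=n)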

Tip: Truncated distributions are useful when modeling constrained variables, like bounded customer spend or physical limits.
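If SciPy is unavailable, or you want to show the mechanics, rejection sampling is a simple alternative technique: draw from the full normal distribution and keep only the draws on the wanted side of the cutoff. This standard-library sketch keeps values at or above the cutoff, matching the lower bound used with truncnorm above; flip the comparison to keep the other tail. The function name, seed, and parameter values are illustrative.

```python
import random
from statistics import NormalDist

def truncated_by_rejection(percentile_threshold, m, sd, n=1000, seed=0):
    """Sample n values from N(m, sd) restricted to the tail above a percentile."""
    # Translate the percentile into a cutoff on the original scale.
    cutoff = NormalDist(m, sd).inv_cdf(percentile_threshold)
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        draw = rng.gauss(m, sd)
        if draw >= cutoff:  # reject draws below the cutoff
            samples.append(draw)
    return cutoff, samples

cutoff, samples = truncated_by_rejection(0.75, m=100, sd=15, n=200)

print(f"cutoff = {cutoff:.2f}, smallest sample = {min(samples):.2f}")
```

Rejection sampling gets slow when the kept region is a thin tail, since most draws are discarded, which is where truncnorm is the better choice.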

Behavioral and Soft Skills Questions for Data Analysts

Technical skills get you noticed, but soft skills often seal the offer. Data analysts work across teams, so interviewers look for strong communication, collaboration, and problem-solving abilities.

These questions reveal how you think, prioritize, and work under pressure. Good answers combine a clear story, your role, and the measurable impact of your work.

Use the STAR method (Situation, Task, Action, Result) to stay focused and concise.

Tip: Don’t just talk about the data; highlight how your insights helped the business, your team, or a customer. It shows that you think beyond the code.

Read more: Data Analyst Behavioral Interview Questions & Answers

Describe a data project you worked on. What were some of the challenges you faced?

This question evaluates your end-to-end problem-solving and project management ability. It’s meant to see how you approach challenges, adapt, and deliver results. Start by outlining a project (e.g., customer churn analysis or dashboard automation). Explain the specific hurdles — missing data, unclear requirements, or stakeholder alignment — and how you solved them. Highlight the result: faster reporting, actionable insights, or measurable improvements.

Example:

“While analyzing customer churn, I found inconsistent timestamps across data sources. I built a preprocessing script to standardize formats, which improved model accuracy by 12%. The project later helped retention teams target high-risk users more effectively.”

Tip: Focus on how you handled ambiguity or technical obstacles — interviewers want to see resilience and initiative.

Describe an analytics experiment that you designed. How were you able to measure success?

This question tests your analytical thinking and experimentation mindset. Discuss how you formed a hypothesis (e.g., “Will email timing affect click-through rates?”), defined control and test groups, and tracked metrics like conversion or engagement. Use Python or SQL examples if relevant, and mention how you determined statistical significance (A/B testing or confidence intervals).

Example:

“I ran an experiment to test how discount timing influenced purchases. Using Python and SQL, I split users into two segments and tracked conversions. The test showed that early-week offers increased purchase rates by 9%, which informed our campaign calendar.”

Tip: Tie your answer back to business outcomes. Interviewers care about how your analysis influenced a decision, not just the numbers.
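If the interviewer asks how you checked statistical significance, a two-proportion z-test is one common option for conversion experiments. The sketch below uses only the standard library; the conversion counts are hypothetical and the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical experiment: control converts 120 of 1,000, test converts 150 of 1,000.
z, p_value = two_proportion_z_test(120, 1000, 150, 1000)

print(f"z = {z:.3f}, p-value = {p_value:.4f}")
```

Being able to say which test you used, and why the sample size was large enough for it, is usually more convincing than quoting the lift alone.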

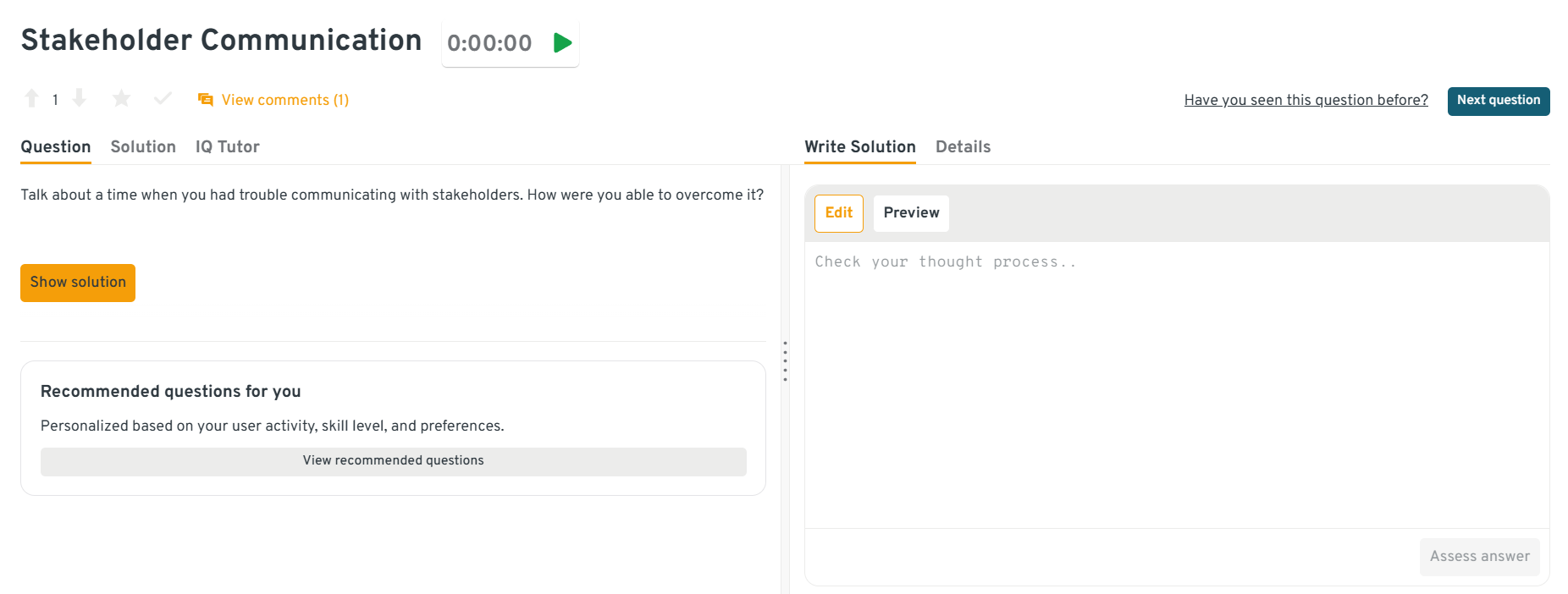

This question assesses your communication and empathy in a cross-functional environment. Explain a real situation where technical jargon or conflicting priorities caused confusion. Describe how you clarified expectations through visuals, simplified reporting, or aligned on definitions.

Example:

“In a sales dashboard project, my initial SQL queries didn’t align with how the sales team defined ‘active customers.’ After a review session, I built a glossary and used visual mockups to ensure we spoke the same language. This reduced revision cycles and improved accuracy.”

Tip: Demonstrate that you listen, clarify, and adapt your communication to your audience; these are key traits for senior analyst roles.

You can explore the Interview Query dashboard that lets you test yourself on real-world interview questions in a live environment. Practice this behavioral question to get instant feedback and learn how you can structure your answer for your next interview.

What are effective ways to make data accessible to non-technical people?

This question tests your data storytelling and democratization skills. Mention strategies like using dashboards (Tableau, Power BI), simplifying metrics, or automating recurring reports with Python. Visualizations and clear labeling make data easier to interpret.

Example:

“I built an automated Tableau dashboard for our marketing team showing weekly campaign performance. I used color-coded KPIs and tooltips instead of raw SQL outputs, which helped non-technical users make quicker decisions without data team support.”

Tip: Show that you think about usability — accessible data builds trust and enables decision-making across teams.

Tell me about a time your analysis led to a measurable business improvement.

This question evaluates your ability to connect analysis to business results. Talk about an insight that changed a process, campaign, or decision. Be specific — quantify the improvement when possible.

Example:

“In an ad spend optimization project, I identified channels with low ROI using a regression analysis. Redirecting 20% of the budget to high-performing channels improved overall conversion by 15%, saving about $50K monthly.”

Tip: Always include measurable results; numbers make your story credible and impactful.

How do you prioritize tasks when working on multiple projects with tight deadlines?

This question tests your organization and time management skills. Discuss frameworks like Eisenhower Matrix or impact vs. effort scoring. Explain how you communicate priorities with stakeholders to avoid confusion and rework.

Example:

“I usually prioritize projects by business impact and urgency. For overlapping timelines, I confirm expectations with stakeholders, document milestones, and automate repetitive tasks to stay efficient.”

Tip: Show that you balance speed with accuracy — analysts who can deliver on time without sacrificing quality are highly valued.

Tell me about a time you received critical feedback. How did you respond?

This question reveals your growth mindset and self-awareness. Choose a real instance where you learned from feedback — for example, unclear documentation or overcomplicating analysis. Explain how you took it constructively and improved your process.

Example:

“Early in my role, a manager noted that my SQL queries were efficient but hard to interpret. I started adding inline comments and summaries to make my code collaborative. That small change improved team reviews significantly.”

Tip: Interviewers value analysts who handle feedback with curiosity and professionalism — it shows maturity and team readiness.

Building and Presenting a Data Analyst Portfolio

Your portfolio is your best proof of what you can do with data. It’s not just a collection of notebooks or dashboards; it’s a story of how you think, analyze, and communicate insights. In a data analyst interview, a strong portfolio can be the difference between being qualified and being memorable.

Below is a structured way to plan, present, and talk about your portfolio effectively.

Read more: How to Create a Stand Out Data Analyst Portfolio

Choosing the Right Projects for a Data Analyst Portfolio

Interviewers look for quality over quantity. Aim for 2–3 high-impact projects that demonstrate a range of data skills like cleaning, analysis, visualization, and storytelling. Pick projects that align with the job you’re applying for.

Key project types to include:

- Data cleaning & preprocessing: Handling missing values, outliers, and inconsistent data (e.g., cleaning a Kaggle dataset or company sales data using Pandas).

- Exploratory Data Analysis (EDA): Identifying trends, anomalies, and correlations (e.g., analyzing customer churn or COVID-19 data).

- Visualization & reporting: Dashboards using Matplotlib, Seaborn, Tableau, or Power BI.

- SQL and database querying: Extracting and joining tables to answer business questions.

- Predictive or statistical modeling: Optional for data analyst roles, but useful if you’ve used regression or hypothesis testing to support recommendations.

Tip: Choose projects that show impact, e.g., “My analysis reduced processing time by 40%,” or “I identified 3 key factors that correlated with churn.”

Structuring Each Data Analyst Portfolio Project

Each portfolio project should follow a clear structure that makes it easy for interviewers to understand your workflow. Think of it like a data story with a beginning, middle, and end.

Use this framework:

| Stage | Description | Example |
|---|---|---|
| Problem Statement | What question are you trying to answer? | “Which customer segments contribute most to monthly revenue?” |
| Data Source | Where did the data come from, and how did you prepare it? | “Extracted raw data from a SQL database and cleaned it using Pandas.” |
| Tools & Libraries | List the main technologies used. | “Python, Pandas, Matplotlib, Seaborn, SQL” |
| Approach & Analysis | Describe your thought process and steps. | “Performed EDA, created correlation plots, and grouped data by region and segment.” |
| Insights & Results | Present your findings clearly and visually. | “Top 10% of customers drove 60% of total revenue.” |
| Impact or Takeaway | Tie the result back to business relevance. | “Recommended targeted offers for high-value customers.” |

Tip: Treat your Jupyter Notebook or project report like a guided walkthrough. Well-commented code, logical section breaks, and brief explanations between steps make your work readable and professional.

Hosting and Presenting Your Data Analyst Portfolio

Your presentation medium matters as much as your analysis. Employers often review portfolios before an interview, so make sure it’s accessible and polished.

Best options:

- GitHub: Host Jupyter Notebooks, SQL scripts, and READMEs with explanations.

- Portfolio website or blog: Platforms like Notion, Wix, or GitHub Pages can showcase projects visually.

- Kaggle or Tableau Public: Great for interactive dashboards and competitions.

- LinkedIn: Post short write-ups of your projects; recruiters often find analysts through LinkedIn activity.

Tip: Always include a short summary at the top of your project repo—busy reviewers might not scroll through your whole notebook, so grab attention early.

Talking About Your Portfolio Projects in Data Analyst Interviews

When interviewers ask about your portfolio:

- Pick one project that best matches the role.

- Walk through it in chronological order using the STAR framework (Situation, Task, Action, Result).

- Emphasize your decisions: why you chose certain metrics, visualizations, or libraries.

- Mention what you’d do differently next time to show reflection and growth.

Example Response:

“For my ‘Customer Retention Dashboard,’ I used SQL and Pandas to merge multiple data sources and visualize monthly churn. I found that users who engaged with two or more product features in their first week were 40% less likely to churn. The marketing team later used this insight to redesign onboarding emails.”

Tip: Speak like you’re telling a story and make your audience feel the problem and see the impact. That’s what transforms data into influence.

Presenting Your Case Study in a Data Analyst Interview

Some interviews involve a live or take-home case study where you walk through your analysis or discuss one of your projects in depth. This is your chance to show storytelling, reasoning, and business sense, and not just technical skill.

How to structure your case study presentation:

- Start with context: “My goal was to identify which product categories were most profitable.”

- Summarize your data: “The dataset included 120K records across five years with missing values in the ‘discount’ column.”

- Explain your approach: “I cleaned the data, ran exploratory analysis, and used visualizations to understand patterns in sales by region.”

- Present visuals or insights: Include 2–3 clean, easy-to-read plots that highlight trends or comparisons.

- Discuss impact and next steps: “Based on the analysis, we recommended reducing discounts in low-margin categories, which improved profit by 8% the following quarter.”

Tip: Keep your slides or visuals simple with one idea per chart. Focus more on your reasoning than your code.

Common Mistakes to Avoid While Building Your Data Analyst Portfolio

- Including too many small or unfinished projects

- Not documenting your workflow or assumptions

- Using overly complex visuals that obscure your insight

- Forgetting to highlight the business value of your findings

- Ignoring version control or reproducibility

Tip: A small number of well-polished, relevant projects always beats a long list of half-finished ones.

A great portfolio tells a cohesive story and shows that you can not only code but think critically, communicate insights, and drive results. Whether it’s a data cleaning project or a predictive model, make sure every project demonstrates clarity, curiosity, and business impact—the three traits that define an outstanding data analyst.

How to Prepare for a Python Data Analyst Interview

Preparation is your biggest advantage in a Python data analyst interview. Interviewers want to see not just technical skill, but also clarity, reasoning, and confidence. Here’s how to prepare effectively and what to avoid.

- Review the fundamentals: Refresh Python basics such as data types, loops, and functions, along with key libraries like Pandas, NumPy, and Matplotlib.

- Practice with real data: Use datasets from Kaggle or public APIs to simulate real business problems. Practice cleaning, aggregating, and visualizing data.

- Revisit your projects: Be able to explain what problem you solved, why you chose a certain approach, and what insights you found.

- Mock interviews: Practice thinking out loud. Explaining your process helps interviewers follow your logic, especially in remote settings.

- Time management: Practice solving problems under time limits so you can stay calm and structured during live coding rounds.

Tip: Build a short “interview notebook” where you summarize your go-to Pandas functions, syntax reminders, and common data transformations.

Common Mistakes to Avoid (and How to Fix Them)

Even the most skilled data analysts can stumble in interviews if they focus too much on coding and overlook communication or context.

Here are the most common pitfalls candidates make and how to fix them.

| # | Mistake | Why It’s a Problem | How to Avoid / Fix It | Example / Tip |
|---|---|---|---|---|
| 1 | Jumping Straight Into Code | You might waste time coding the wrong solution or miss key assumptions. | Pause, clarify the task, and restate the problem before coding. | “So, you’d like me to group the data by region and calculate the mean sales, excluding missing values, is that correct?” |
| 2 | Ignoring Data Quality Issues | Running analysis on dirty data signals carelessness and leads to wrong conclusions. | Always check for missing values and outliers before analysis. | Use df.info() or df.isnull().sum() and explain your reasoning. |
| 3 | Giving One-Line Answers | Short answers show correctness but not understanding or communication skill. | Expand with examples or reasoning to show comprehension. | “A list can be changed, like appending elements, while a tuple is fixed, useful for storing coordinates.” |
| 4 | Overusing Technical Jargon | Non-technical interviewers may not follow, weakening your impact. | Use simple, clear language when explaining technical steps. | “We filled missing values and removed extreme outliers to ensure data accuracy.” |
| 5 | Forgetting to Connect to Business Value | Over-focusing on tools or code detaches your work from real-world outcomes. | Always tie your answer back to the business impact. | “The analysis helped the marketing team identify top regions and optimize budgets.” |

Tip: Interviewers don’t just evaluate your technical accuracy; they assess how you think, communicate, and make decisions with data. Great analysts don’t rush to code; they clarify problems, question assumptions, and explain trade-offs clearly.

Show that you understand context, connect your technical work to real outcomes, and think like a problem-solver who can translate analysis into impact. When in doubt, slow down, explain your reasoning, and tie every answer back to business value.

FAQs: Python & Data Analyst Interview Questions Answered

What are the most common Python questions asked in data analyst interviews?

You’ll usually get a mix of Python fundamentals and data manipulation tasks. Expect questions on:

- Data types (lists, dictionaries, tuples)

- Loops, conditions, and functions

- Pandas operations like groupby(), merge(), and fillna()

- Basic statistics and data visualization

How do I prepare for both technical and behavioral rounds?

Split your prep into two parts:

- Technical: Practice Python coding, data cleaning, and problem-solving with real datasets.

- Behavioral: Use the STAR method (Situation, Task, Action, Result) to explain past experiences and teamwork stories clearly.

Which Python libraries should I focus on for data analysis?

Start with Pandas (data manipulation) and NumPy (numerical operations). Then, explore Matplotlib or Seaborn for visualization and Scikit-learn if the role touches light machine learning or prediction tasks.

How do I present my portfolio or projects in an interview?

Choose 2–3 strong projects that show different skills, like data cleaning, analysis, and visualization.

Keep your explanation short:

“I analyzed e-commerce sales data using Pandas and visualized trends with Seaborn to help optimize pricing strategy.”

Host your work on GitHub or a portfolio site with clear documentation.

What are some common mistakes to avoid in Python interviews?

- Jumping into code without understanding the question

- Ignoring missing data or edge cases

- Using jargon without clear explanations

- Focusing only on code instead of the insights it produced

Are remote or virtual interviews different for data analyst roles?

The structure is the same, but communication matters more.

Speak clearly, share your screen confidently, and explain your steps while coding. Test your setup beforehand to avoid technical issues.

Conclusion

Python sits at the core of every great data analyst’s toolkit. From cleaning data to uncovering insights, your ability to think and communicate in Python sets you apart in interviews.

As you prepare, focus on understanding and not memorizing. Practice real-world problems, review your projects, and be ready to explain why your solutions matter.

With these 50+ questions, examples, and tips, you now have a clear roadmap to build confidence and show interviewers that you can turn data into impact. Great analysts don’t just analyze data; they tell stories with it. Use Python to do both.

Tip: The best candidates don’t rush. They ask clarifying questions, explain their logic, and tie their answers back to real business outcomes—that’s what turns a good interview into a great one.

Ready to nail your next Data Analyst interview?

Start with our Data Analyst Question Bank to practice real-world scenario questions used in top interviews. Sign up for Interview Query to test yourself with Mock Interviews today.

If you want to explore company-specific hubs, browse our tailored hubs for top companies like Meta, Google, Microsoft, and Amazon. Pair with our AI Interview Tool to sharpen your storytelling.